In the tech world, there are conferences — and there are events.

With more than 60,000 people attending in person and another 400,000 watching online, AWS re:Invent is one of the year’s biggest draws for builders, developers and anyone who seeks to stay ahead of the game with generative AI.

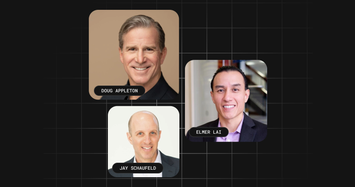

As an official partner of AWS, SingleStore was both a sponsor and an avid participant. You may have seen our mobile billboard driving around Las Vegas. We also hosted a booth, took part in the AWS genius bar, held a mini-golf party and delivered a talk about building multi-agent RAG systems with Amazon Bedrock and SingleStore.

AWS broke a fair amount of news at this year’s event. Its announcements were aimed at improving performance and scalability, echoing the kind of product advances we unveiled at SingleStore Now earlier this year. We see this as validation of the need for a single interface to all data, and as confirmation of the three principles that have always guided us at SingleStore: speed, scale and simplicity.

Let’s consider some of the biggest news and what it means for developers like you who are building enterprise AI applications.

Amazon Aurora DSQL

The first database-related announcement was the release of Amazon Aurora DSQL (Distributed SQL). According to the announcement, Aurora DSQL is a serverless, distributed SQL database that keeps applications always available, with virtually unlimited scalability and zero infrastructure management. If you have been in the software development world for a while, this may remind you of another distributed SQL database: CockroachDB.

Why does it matter?

Even in the world of AI (especially in the world of AI), horizontally scalable, distributed SQL matters more than ever. This announcement also signals that companies aren't just looking for distributed SQL databases that scale, but for ones that also provide strong consistency.

Amazon S3 enhancements

Amazon S3, one of AWS's most widely used services, also saw significant updates aimed at improving performance and scalability for data lakes. The introduction of S3 Table Buckets is particularly noteworthy. These buckets are optimized for Apache Iceberg tables, and AWS says they deliver up to three times faster query performance and up to 10 times more transactions per second than Iceberg tables stored in general purpose S3 buckets.

In addition, Amazon S3 now supports up to one million buckets per AWS account, a substantial increase from the previous default limit of 100. This gives teams far more flexibility in how they organize and manage large volumes of data.

Why does it matter?

Iceberg adoption is catching fire, and AWS is on board as well. This is a clear sign of where data lakes and data warehouses are heading: toward a world where the same data can be used interchangeably by different databases and data platforms. Fun fact: earlier this year, SingleStore announced support for Iceberg tables, so you can run real-time analytics on your data lake and warehouse data.

SageMaker Lakehouse

Part of the next generation of Amazon SageMaker, SageMaker Lakehouse is a unified platform for data management and analytics. It integrates data across Amazon S3 data lakes, Amazon Redshift data warehouses and other sources into a single platform. It supports SQL analytics, big data processing, model development and generative AI applications, and because it is compatible with the Apache Iceberg open standard, users can query data regardless of where it is physically stored. The platform also includes built-in governance capabilities that secure access to data across different analytics tools.

Last but not least, SageMaker Lakehouse supports zero-ETL integrations with databases such as Amazon Aurora MySQL and Aurora PostgreSQL, as well as with popular SaaS applications. It also lets you use third-party data for analytics and ML without building complex pipelines.

Why does it matter?

Increasingly, large companies want seamless access to all of their organizational data through familiar AI and ML tools or query engines, instead of making multiple retrieval calls across multiple point databases. But did you notice what's missing? SageMaker Lakehouse does not support real-time data (yet).

AWS also announced updates to Amazon Kendra, its enterprise search service, including a new Kendra GenAI Index. How is Kendra different from OpenSearch? OpenSearch is primarily a search and analytics engine forked from Elasticsearch that you configure and tune yourself. Kendra, by contrast, is a managed service built for natural language queries and semantic search out of the box.

What it all means

This year’s AWS re:Invent validates what we have often said: data has finally become the true differentiator for AI. As LLMs become a commodity and enterprises take their AI applications to production, providers are rushing to offer a single interface to all data. They are unlocking lakehouse data through Iceberg for real-time analysis at speed and scale, and making sure AI apps work with the freshest data so their answers stay accurate.

SingleStore has been on that path for quite some time, offering millisecond response times on petabytes of data across all data types. Earlier this year, we strengthened our hybrid search capabilities, added support for Iceberg tables and became a Snowpark Container Services (SPCS) partner on Snowflake.

It’s gratifying to see our formula of speed, scale and simplicity across all data types being strongly validated by the industry’s major players, including AWS. Click here to learn more about our partnership with AWS.