Welcome, DynamoDB x Rockset developers! This blog will show you how to easily set up CDC from your AWS DynamoDB database to SingleStore using DynamoDB streams and a Lambda function.

Step 1. Enable DynamoDB streams

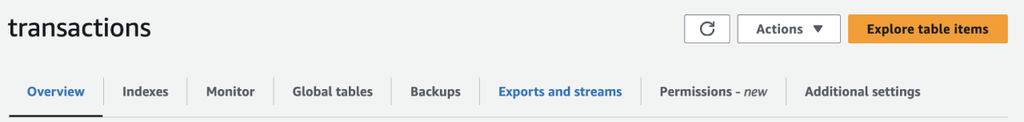

Here are the steps to enable DynamoDB streams on a given table:

- Open the DynamoDB console

- Choose Tables

- Choose the table you want to set up the stream for

- Under “Exports and streams,” choose DynamoDB stream details

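- Choose Turn on

- For view type, choose the option that meets your requirements: Key attributes only, New image, Old image or New and old images. The Lambda function in step 3 reads each record's NewImage, so pick New image or New and old images to follow along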

- Select “Turn on stream”

Once complete, your DynamoDB streams box should look like this.
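If you would rather script this step than click through the console, the same setting can be applied with boto3's `update_table` call. The following is a minimal sketch, assuming a table named `transactions` in `us-east-1` (the names used by the test scripts in step 5) and the `NEW_AND_OLD_IMAGES` view type. The helper names `build_stream_spec` and `enable_stream` are illustrative, not part of any library.

```python
# The four stream view types DynamoDB accepts.
VALID_VIEW_TYPES = {"KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES"}


def build_stream_spec(view_type="NEW_AND_OLD_IMAGES"):
    """Return the StreamSpecification dict that update_table expects."""
    if view_type not in VALID_VIEW_TYPES:
        raise ValueError(f"Unknown stream view type: {view_type}")
    return {"StreamEnabled": True, "StreamViewType": view_type}


def enable_stream(table_name, view_type="NEW_AND_OLD_IMAGES", region="us-east-1"):
    """Turn on the stream for an existing table and return its stream ARN."""
    import boto3  # imported lazily so build_stream_spec works without boto3

    client = boto3.client("dynamodb", region_name=region)
    response = client.update_table(
        TableName=table_name,
        StreamSpecification=build_stream_spec(view_type),
    )
    return response["TableDescription"].get("LatestStreamArn")


# Example call (requires AWS credentials, table name is an assumption):
# stream_arn = enable_stream("transactions")
```

The returned stream ARN is the value you need later when connecting the stream to a Lambda function.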

Step 2. Set up the SingleStore database

Now that we have everything set up on the DynamoDB side, let’s set up our SingleStore Helios® environment. For this blog, we’ll assume you’ve already created a workspace group to determine your AWS region, created a workspace in SingleStore Helios and granted IP access between the workspace group and your AWS account.

    CREATE DATABASE demo;
    USE demo;
    DROP TABLE IF EXISTS transactions;
    CREATE TABLE transactions (
        json_col JSON
    );

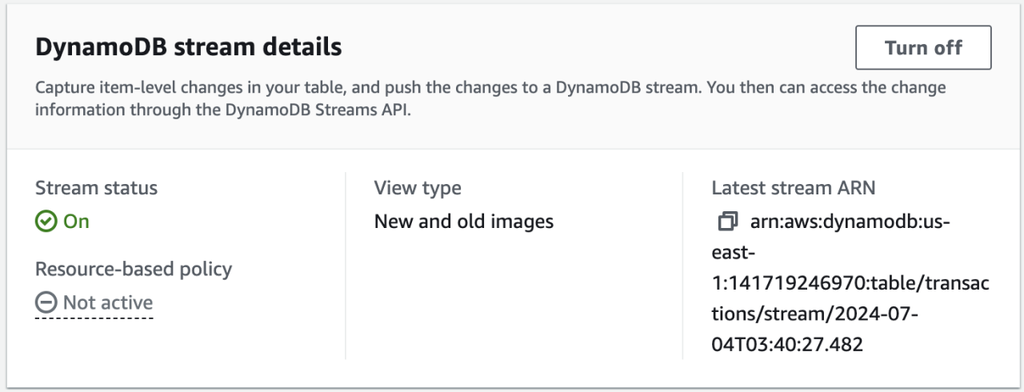

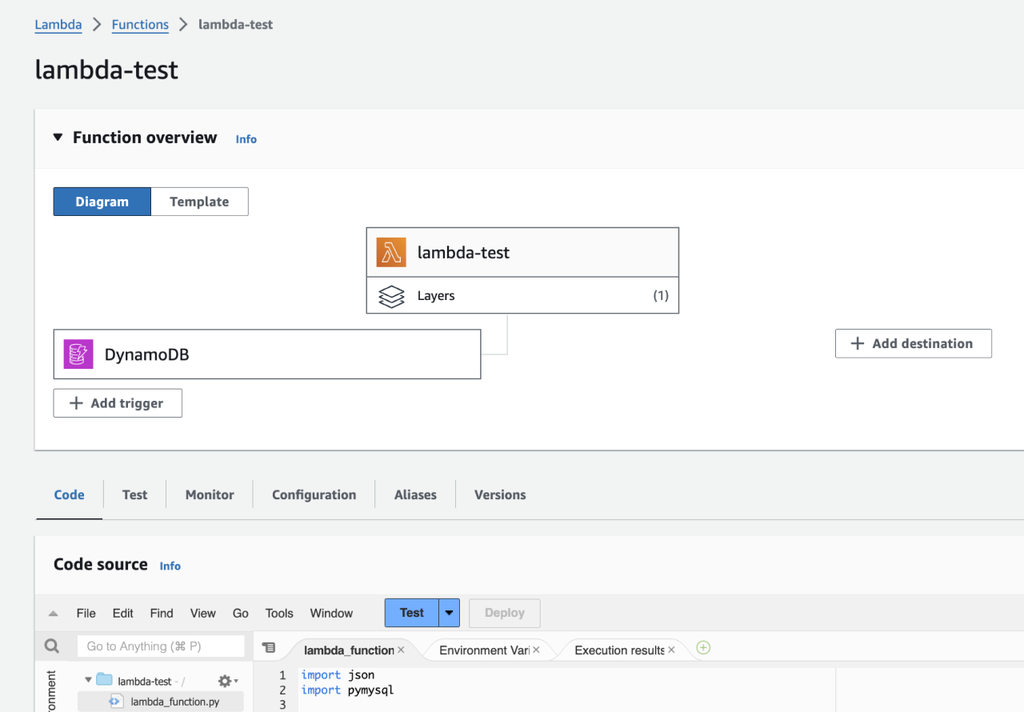

Step 3. Create a Lambda function to consume the stream and write to SingleStore

Now that we have the DynamoDB stream set up, we need to create a Lambda function to be invoked and executed each time an event is pushed into the DynamoDB stream. To create the Lambda function, navigate to the Lambda console and select ‘Create function.’ From there you will be taken to a window like in the screenshot here.

In our example, we’re using the ‘Author from scratch’ creation method with the default options (except for selecting Python 3.12 as the runtime).

In the newly created Lambda function console, scroll down to the code section. Here, you can write the code to be executed each time new data from the DynamoDB stream invokes the Lambda function.

We’re going to keep our example simple and load the JSON payload directly into a JSON column in SingleStore, so no data transformations are needed. The following Python code defines how the Lambda function pushes changes from DynamoDB to SingleStore:

    import json
    import pymysql

    # SingleStore connection details (placeholders, replace with your own)
    SINGLESTORE_HOST = 'singlestore_host_connection_string'
    SINGLESTORE_USER = 'user_name'
    SINGLESTORE_PASSWORD = 'user_password'
    SINGLESTORE_DB = 'db_name'


    def connect_singlestore():
        return pymysql.connect(
            host=SINGLESTORE_HOST,
            user=SINGLESTORE_USER,
            password=SINGLESTORE_PASSWORD,
            db=SINGLESTORE_DB,
            cursorclass=pymysql.cursors.DictCursor
        )


    def lambda_handler(event, context):
        for record in event['Records']:
            print(record)

            # Skip anything that is not a DynamoDB insert, update or delete
            if record['eventSource'] != 'aws:dynamodb':
                continue
            event_name = record['eventName']
            if event_name not in ('INSERT', 'MODIFY', 'REMOVE'):
                continue

            connection = connect_singlestore()
            print("Connected to SingleStore")
            try:
                with connection.cursor() as cursor:
                    if event_name == 'INSERT':
                        # Store the whole new item as one JSON document
                        json_str = json.dumps(record['dynamodb']['NewImage'])
                        cursor.execute(
                            "INSERT INTO transactions (json_col) VALUES (%s)",
                            (json_str,)
                        )

                    elif event_name == 'REMOVE':
                        # DynamoDB numbers arrive as strings, so json_col::id::N
                        # holds a JSON string such as "1"
                        delete_key = record['dynamodb']['Keys']['id']['N']
                        print(delete_key)
                        cursor.execute(
                            "DELETE FROM transactions WHERE json_col::id::N = %s",
                            (json.dumps(delete_key),)
                        )

                    else:  # MODIFY
                        update_key = record['dynamodb']['Keys']['id']['N']
                        update_value = record['dynamodb']['NewImage']['total_cost']['N']
                        print(update_key, update_value)
                        cursor.execute(
                            "UPDATE transactions SET json_col::total_cost::N = %s "
                            "WHERE json_col::id::N = %s",
                            (json.dumps(update_value), json.dumps(update_key))
                        )

                connection.commit()
            except Exception as e:
                print(f"Error: {str(e)}")
            finally:
                connection.close()
                print("Connection closed")

        return {
            'statusCode': 200,
            'body': json.dumps('Data processed successfully')
        }

This Lambda function handles new inserts, updates and deletes from the DynamoDB table and pushes the changes into our SingleStore table. We’ve stuck with basic update logic for our demo purposes, but you can add any logic in your Lambda function to handle application-specific scenarios.
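To make the shape of the incoming event easier to picture, here is a minimal sketch of what a DynamoDB stream event looks like and how its records can be routed. The sample values are made up for illustration, and `summarize` is a hypothetical helper rather than part of the function above. Note that DynamoDB wraps every attribute in a type descriptor, so numbers arrive as strings under an `N` key, which is why the handler reads `['id']['N']`.

```python
# A hand-written sample event with two records (values are illustrative only).
SAMPLE_EVENT = {
    "Records": [
        {
            "eventSource": "aws:dynamodb",
            "eventName": "INSERT",
            "dynamodb": {
                "Keys": {"id": {"N": "1"}},
                "NewImage": {
                    "id": {"N": "1"},
                    "total_cost": {"N": "42.5"},
                    "transaction_at": {"N": "1700000000"},
                },
            },
        },
        {
            "eventSource": "aws:dynamodb",
            "eventName": "REMOVE",
            "dynamodb": {"Keys": {"id": {"N": "1"}}},
        },
    ]
}


def summarize(event):
    """Return (event name, id) pairs for the DynamoDB records in an event."""
    summary = []
    for record in event["Records"]:
        if record.get("eventSource") != "aws:dynamodb":
            continue
        key = record["dynamodb"]["Keys"]["id"]["N"]
        summary.append((record["eventName"], key))
    return summary


print(summarize(SAMPLE_EVENT))
```

An event like this can also be pasted into the Lambda console's test feature to exercise the handler before any real data flows through the stream.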

Note: if you need to import any libraries that are not pre-installed in the Lambda environment (pymysql, used above, is one of them), you’ll need to create a Lambda layer to manage the function’s dependencies.

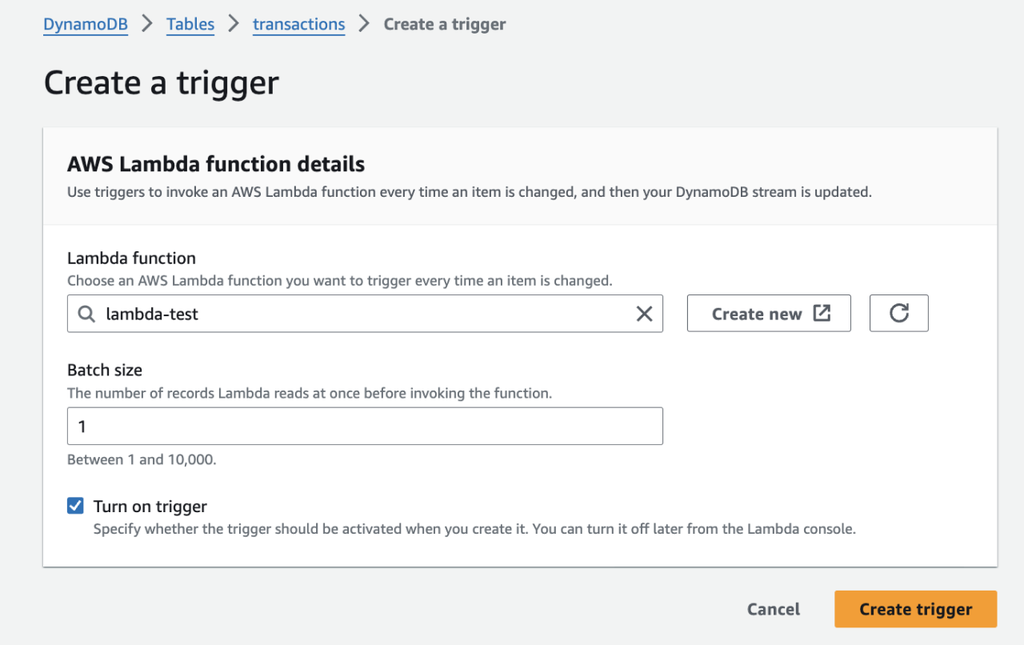

Step 4. Connect your DynamoDB stream to the Lambda function

The final step on the DynamoDB side is to create a trigger connecting your DynamoDB stream to the newly created Lambda function. To do this, navigate back to the table where you set the stream up and at the bottom of the ‘Exports and Streams’ tab, there will be a ‘triggers’ box. Select ‘Create trigger’ and fill in the next page.

Now our Lambda will be invoked by CDC events in our Dynamo stream.
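The trigger can also be created programmatically. The sketch below uses boto3's `create_event_source_mapping` call on the Lambda client. It assumes you already have the stream ARN and the function name at hand; the helper names, the `LATEST` starting position and the batch size of 100 are illustrative choices, not requirements. The function's execution role also needs permission to read from the stream.

```python
def build_trigger_kwargs(stream_arn, function_name, batch_size=100):
    """Build the arguments for Lambda's create_event_source_mapping call."""
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        # LATEST processes only new changes; TRIM_HORIZON would start from
        # the oldest record still held in the stream.
        "StartingPosition": "LATEST",
        "BatchSize": batch_size,
    }


def create_trigger(stream_arn, function_name, region="us-east-1"):
    """Connect a DynamoDB stream to a Lambda function and return the mapping ID."""
    import boto3  # imported lazily so build_trigger_kwargs works without boto3

    client = boto3.client("lambda", region_name=region)
    response = client.create_event_source_mapping(
        **build_trigger_kwargs(stream_arn, function_name)
    )
    return response["UUID"]


# Example call (requires AWS credentials, both arguments are placeholders):
# create_trigger("<your-stream-arn>", "<your-function-name>")
```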

Step 5. Test out the connection from DynamoDB to SingleStore

Now we get to the fun part! Let’s test out what we’ve built with some data. To do this, we’re going to run three separate Python scripts, one for each operation (insert, update and delete).

Insert data

First, let’s test inserting data into DynamoDB to see if it flows through to SingleStore. The following script generates 10 synthetic transactions and writes them to DynamoDB.

    import time
    from decimal import Decimal
    from random import uniform

    import boto3

    # Initialize the DynamoDB table resource
    dynamodb = boto3.resource('dynamodb', region_name='us-east-1')
    table_name = 'transactions'
    table = dynamodb.Table(table_name)


    def generate_transaction(i):
        # boto3 requires Decimal rather than float for DynamoDB numbers
        return {
            'id': i,
            'total_cost': Decimal(str(round(uniform(10.0, 100.0), 2))),
            'transaction_at': int(time.time())
        }


    def write_to_dynamodb(transaction):
        response = table.put_item(Item=transaction)
        print(f"Successfully inserted transaction with id: {transaction['id']}")
        return response


    # Generate and write 10 synthetic transactions
    for i in range(10):
        transaction_data = generate_transaction(i)
        print(transaction_data)
        write_to_dynamodb(transaction_data)

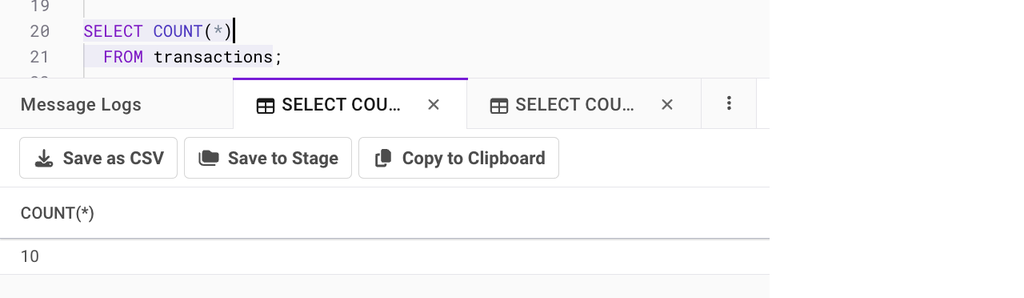

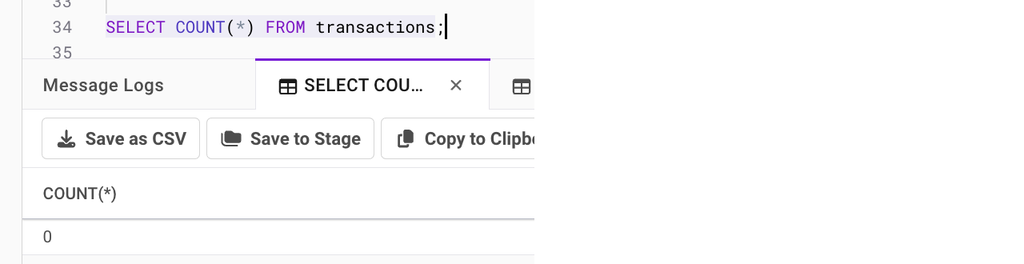

After running the script, confirm all the transactions have been replicated to SingleStore by doing a count on the transactions table.

    SELECT COUNT(*) FROM transactions;

Great! So we’ve got inserts working. Let’s inspect what the data looks like before moving onto updates.

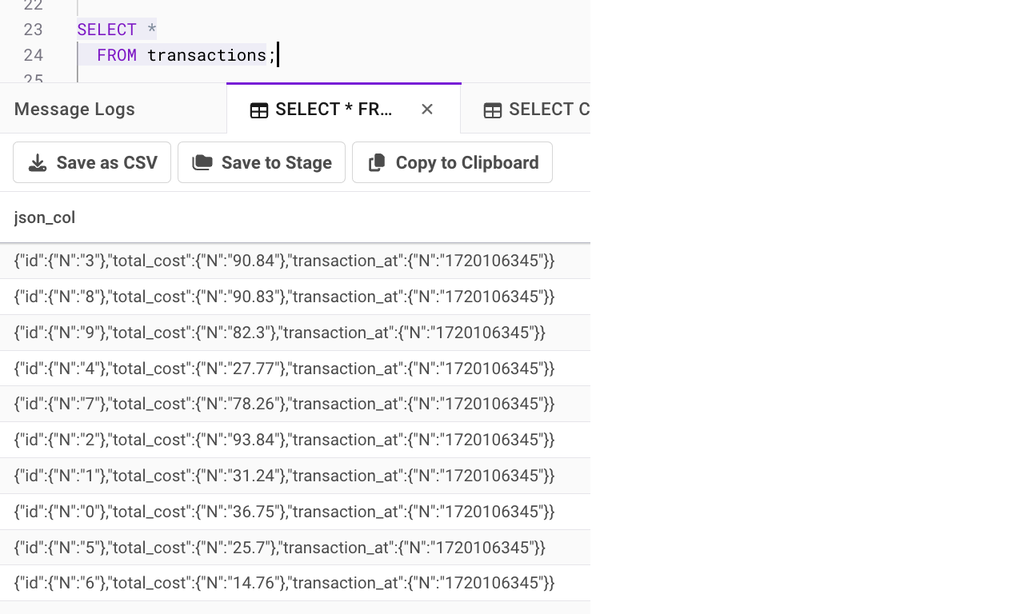

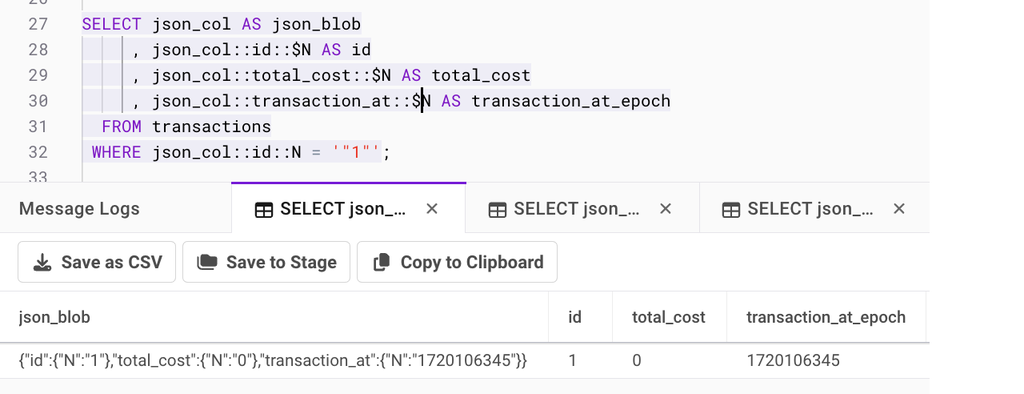

    SELECT * FROM transactions;

The data is stored as JSON blobs. We can parse out individual key-value pairs using the :: operator and work with them in SQL.

Note: if you want to pull out specific key-value pairs and store them in their own columns when writing to SingleStore, a great way to do this is with SingleStore persisted computed columns. Alternatively, you could parse them out in the Lambda function and insert directly into individual columns.
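As a rough illustration of the second option, the sketch below flattens a stream record's `NewImage` into typed values that could be bound to a parameterized insert. It assumes a hypothetical table `transactions_flat` with `id`, `total_cost` and `transaction_at` columns, which is not created anywhere in this post; `flatten_image` and `INSERT_FLAT_SQL` are likewise illustrative names.

```python
from decimal import Decimal

# Parameterized insert for a hypothetical flattened table.
INSERT_FLAT_SQL = (
    "INSERT INTO transactions_flat (id, total_cost, transaction_at) "
    "VALUES (%s, %s, %s)"
)


def flatten_image(new_image):
    """Convert a DynamoDB-typed NewImage into a tuple of plain column values."""
    return (
        int(new_image["id"]["N"]),
        Decimal(new_image["total_cost"]["N"]),
        int(new_image["transaction_at"]["N"]),
    )


# Illustrative input in the same shape the stream delivers.
row = flatten_image({
    "id": {"N": "1"},
    "total_cost": {"N": "42.5"},
    "transaction_at": {"N": "1700000000"},
})
print(row)
```

Inside the Lambda handler, this would be used as `cursor.execute(INSERT_FLAT_SQL, flatten_image(record['dynamodb']['NewImage']))` in place of the JSON insert.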

Update

To test if updates to DynamoDB are flowing through, let’s update the total_cost value to 0 where the id value is 1. The following script makes this change to DynamoDB.

    from decimal import Decimal

    import boto3
    from botocore.exceptions import ClientError

    # Initialize the DynamoDB table resource
    dynamodb = boto3.resource('dynamodb', region_name='us-east-1')
    table_name = 'transactions'
    table = dynamodb.Table(table_name)


    def update_transaction(transaction_id, total_cost=None):
        # Build the update expression and attribute values dynamically
        update_expression = "SET"
        expression_attribute_values = {}
        expression_attribute_names = {}

        if total_cost is not None:
            update_expression += " #tc = :total_cost,"
            expression_attribute_values[":total_cost"] = total_cost
            expression_attribute_names["#tc"] = "total_cost"

        # A bare "SET" is not a valid expression, so stop if nothing was supplied
        if not expression_attribute_values:
            print("Nothing to update")
            return

        # Remove trailing comma
        update_expression = update_expression.rstrip(',')

        try:
            response = table.update_item(
                Key={'id': transaction_id},
                UpdateExpression=update_expression,
                ExpressionAttributeValues=expression_attribute_values,
                ExpressionAttributeNames=expression_attribute_names,
                ReturnValues="UPDATED_NEW"
            )
            print("UpdateItem succeeded:")
            print(response)
        except ClientError as e:
            print(e.response['Error']['Message'])


    # Example usage
    if __name__ == '__main__':
        transaction_id = 1
        new_total_cost = Decimal(0)

        update_transaction(transaction_id, new_total_cost)

After successfully running the script, let’s check if the update flowed through to SingleStore.

Great, now we’ve got inserts and updates working!

Delete

Finally, let’s delete all the records to clean up our tables. The following script loops through all the records in DynamoDB and deletes them.

    import boto3

    # Initialize the DynamoDB table resource
    dynamodb = boto3.resource('dynamodb', region_name='us-east-1')
    table_name = 'transactions'
    table = dynamodb.Table(table_name)


    def delete_all_items():
        # Scan the table to get all items (a single scan call is enough
        # for the 10 demo records)
        scan = table.scan()
        with table.batch_writer() as batch:
            for each in scan['Items']:
                batch.delete_item(
                    Key={
                        'id': each['id']  # Replace with your table's primary key
                    }
                )
        print(f"Deleted {len(scan['Items'])} items from {table_name}")


    # Main execution
    if __name__ == '__main__':
        delete_all_items()
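One caveat for tables larger than this demo: a single `scan` call returns at most 1 MB of data, and signals that more remains by including a `LastEvaluatedKey` in the response. The sketch below shows one way to follow those pages and collect every key. `scan_all_keys` is an illustrative helper, and `FakeTable` is a stand-in that exists only so the loop can be run without AWS; with real data you would pass the boto3 `table` object instead.

```python
def scan_all_keys(table, key_name="id"):
    """Collect the primary key of every item, following scan pagination."""
    keys = []
    kwargs = {
        # Fetch only the key attribute to keep each page small
        "ProjectionExpression": "#k",
        "ExpressionAttributeNames": {"#k": key_name},
    }
    while True:
        page = table.scan(**kwargs)
        keys.extend(item[key_name] for item in page["Items"])
        last_key = page.get("LastEvaluatedKey")
        if last_key is None:
            break
        # Resume the next scan where this page stopped
        kwargs["ExclusiveStartKey"] = last_key
    return keys


class FakeTable:
    """Stand-in for a boto3 Table that serves two pages of results."""

    def __init__(self):
        self.pages = [
            {"Items": [{"id": 0}, {"id": 1}], "LastEvaluatedKey": {"id": 1}},
            {"Items": [{"id": 2}]},
        ]

    def scan(self, **kwargs):
        return self.pages[1] if "ExclusiveStartKey" in kwargs else self.pages[0]


print(scan_all_keys(FakeTable()))
```

The collected keys can then be fed to `batch.delete_item` in the same way as the script above.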

After running the script, confirm the records were deleted in SingleStore:

We’ve now set up inserts, updates and deletes and are one step closer to migrating our application completely.

Conclusion

Leveraging DynamoDB streams and a Lambda function allows you to quickly set up CDC from DynamoDB into SingleStore in a manner that might feel familiar to Rockset developers. This will likely allow for a quicker migration before potentially exploring SingleStore pipelines from Kafka that can infer schema.

However you choose to ingest data from DynamoDB to find your Rockset alternative, SingleStore allows you to deliver real-time analytics and contextualization behind your application that can handle complex parameterized queries. SingleStore is purpose-built to handle real-time transactions, analytics and search — and we’ve done this repeatedly at enterprise scale.

Ready to start your Rockset migration? Chat with a SingleStore Field Engineer today.
