.png?width=736&disable=upscale&auto=webp)

A range of open-source and commercial tools can provide Extract, Load and Transform (ELT) capabilities. These tools provide source and destination connectors and can use automatic data format conversion. This article uses a commercial ELT tool, Hevo Data, to replicate a MongoDB Atlas database to Singlestore Helios.

In a previous article, we used open-source Airbyte to create an ELT pipeline between SingleStoreDB and Apache Pulsar. We have also seen in another article several methods to ingest JSON data into SingleStoreDB. In this article, we'll evaluate a commercial ELT tool called Hevo Data to create a pipeline between MongoDB Atlas and Singlestore Helios.

Switching to SingleStoreDB has many benefits, as shared by Rimdian (formerly Captain Metrics) in a webinar detailing why they moved away from MongoDB.

SingleStoreDB Configuration

We’ve previously covered the steps required to create a free Singlestore Helios account. We'll use Hevo Demo Group as our Workspace Group Name, and hevo-demo as our Workspace Name. We'll make a note of our password and host name. Finally, we'll create a new database using the SQL Editor:

CREATE DATABASE hevo_demo;MongoDB Configuration

We'll use the MongoDB Atlas shared cluster deployment on AWS. This will give us a three-node cluster (one primary and two secondary). Once the cluster is deployed, we'll load the sample dataset. We'll also create a user called hevo and assign readAnyDatabase privileges to this user for our initial tests.

Hevo Data Configuration

1. Configure Source

We'll search and choose MongoDB Atlas as the source.

We'll fill in the Configure your MongoDB Atlas Source form as follows:

- Pipeline Name: MongoDB Atlas Source

- General Connection Settings:

- Select Paste Connection String

- Connection URI: mongodb+srv://hevo:<password>@<cluster>

- Select an Ingestion Mode: Change Streams

- Advanced Settings: Disable Load All Databases and select sample_restaurants from the list

We'll replace the <password> and <cluster> with the values from MongoDB Atlas.

Several Hevo Data IP addresses are also listed, and should be added to the IP Access List in MongoDB Atlas.

We'll use the TEST CONNECTION button (the connection should be successful).

Next, we'll click TEST & CONTINUE.

2. Select objects

On the next page, we'll check (✔) All objects selected and click CONTINUE.

3. Configure destination

We'll search and choose MySQL as the destination.

We'll fill in the Configure your MySQL Destination form with the following information:

- Destination Name: SingleStoreDB Destination

- Database Host: <host>

- Database Port: 3306

- Database User: admin

- Database Password: <password>

- Database Name: hevo_demo

We'll replace the <host> and <password> with the values from our Singlestore Helios account.

Several Hevo Data IP addresses are also listed, and these should be added to the Inbound IP Allowlist in the Singlestore Helios Firewall. We'll use the TEST CONNECTION button, and the connection should be successful.

Next, we'll click SAVE & CONTINUE.

4. Final settings

We'll use Auto Mapping and Replicate JSON fields to JSON columns. Next, we'll click CONTINUE.

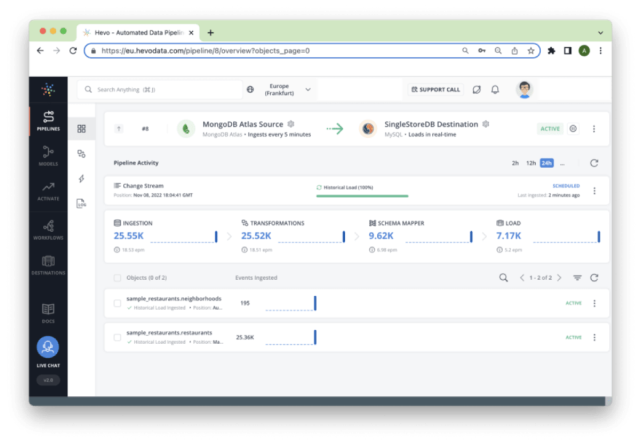

The pipeline should start running shortly afterwards, as shown in Figure 1.

Note that it will take some time for the pipeline to complete. In SingleStoreDB two tables should be created — restaurants and neighborhoods — to match the two collections in MongoDB.

We can quickly check each table in SingleStoreDB to view what the Hevo Data pipeline has generated. Here is an example from the restaurants table:

*************************** 1. row ***************************

_id: 5eb3d669b31de5d588f4754d

address:

{"building":"7015","coord":[-74.026271,40.634328],"street":"3

Avenue","zipcode":"11209"}

borough: Brooklyn

cuisine: Ice Cream, Gelato, Yogurt, Ices

grades:

[{"date":1401494400000,"grade":"A","score":10}]

name: Uncle Mikes Ices

restaurant_id: 50001556

__hevo__database_name: sample_restaurants

__hevo_id:

ef392c2550f086e4e22680e2b9753383ff6e9ae0747c5634b1c4188978815eaf

__hevo__ingested_at: 1667930442414

__hevo__marked_deleted: 0

__hevo__source_modified_at: NULLAnd here is a truncated example from the neighborhoods table:

*************************** 1. row ***************************

_id: 55cb9c666c522cafdb053ab3

geometry:

{"coordinates":[[[-73.74016951762782,40.73848189750691],[-73.741

44628592867,40.736938746807425],[-73.74151636839602,40.736860361

04047],

...

[-73.74055585492765,40.73858718262385],[-73.74029081629884,40.73

851667014021],[-73.74016951762782,40.73848189750691]]],"type":"P

olygon"}

name: Oakland Gardens

__hevo__database_name: sample_restaurants

__hevo_id:

b75f4ff0eddfff963f8a983274c856cf1d433806a536fddf36bfe1bb8c9def76

__hevo__ingested_at: 1667930426142

__hevo__marked_deleted: NULL

__hevo__source_modified_at: NULLEventually, there should be 25,359 rows in the restaurants table and 195 rows in the neighborhoods table. These numbers match the numbers from MongoDB Atlas.

Summary

This short article has shown one example of a database automatically replicated to SingleStoreDB using a commercial ELT platform. Overall, the results have been very promising. Auto Mapping worked successfully, and all the data was copied from MongoDB Atlas to Singlestore Helios.

Interested in how you can drive faster analytics for JSON data on MongoDB — without any code changes or major schema migrations? Join us on May 18 for a live product launch, where we’re introducing a way for you to do just that. Reserve your spot here.

And in the meantime, you can get started building with SingleStoreDB today.