MongoDB® is a widely used NoSQL database for storing large volumes of unstructured data in a document-oriented format.

Although data can be stored in MongoDB, fast search functionality often requires a separate software layer such as Elasticsearch. Elasticsearch is a search and analytics engine that indexes and analyzes both structured and unstructured data.

Many organizations rely on both: Elasticsearch excels at search-related operations, while MongoDB scales efficiently to handle large and complex data sets. However, the two systems are independent of one another and must be integrated deliberately. That integration is often difficult, and it only gets harder as your data grows more intricate. In this blog, we’ll look at the most common ways companies integrate the two systems, as well as an alternative that can save you time and money: SingleStore Kai™.

Option 1: Integrating MongoDB and Elasticsearch

Method 1: MongoDB Connector

MongoDB Connector (mongo-connector) is a Python-based data-export tool developed by MongoDB. For small to medium workloads, it can continuously synchronize data from MongoDB into a target system such as Elasticsearch on its own.

Pros

- Fairly easy to set up

- Real-time data synchronization by tailing the MongoDB operations log (oplog)

Cons

- Can’t scale efficiently beyond small-to-medium workloads

- Configuration complexity grows with the complexity of your MongoDB schema

- Not flexible out of the box; customizing it requires a high degree of product knowledge
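To make the oplog-based approach more concrete, here is a minimal sketch of how individual oplog entries could be translated into Elasticsearch bulk actions. It is not mongo-connector’s actual code. The function name oplog_entry_to_action and the fetch_doc callback, which looks up the current version of an updated document, are hypothetical. The sketch relies only on the standard oplog fields op, o and o2.

```python
from typing import Any, Callable, Optional

Doc = dict[str, Any]


def oplog_entry_to_action(
    entry: Doc,
    index: str,
    fetch_doc: Callable[[Any], Optional[Doc]],
) -> Optional[Doc]:
    """Translate one MongoDB oplog entry into an Elasticsearch bulk action.

    Returns None for entries that need no action (commands, no-ops, or
    updates to documents that no longer exist).
    """
    op = entry.get("op")
    if op == "d":
        # Delete: "o" holds only the _id of the removed document
        return {"_op_type": "delete", "_index": index, "_id": str(entry["o"]["_id"])}
    if op == "i":
        # Insert: "o" holds the full new document
        doc = entry["o"]
    elif op == "u":
        # Update: "o" holds the change, not the whole document, so re-read
        # the current version using the _id stored in "o2"
        doc = fetch_doc(entry["o2"]["_id"])
        if doc is None:
            return None
    else:
        # "c" (command) and "n" (no-op) entries are ignored in this sketch
        return None

    source = dict(doc)  # copy so the caller's document is not mutated
    doc_id = str(source.pop("_id"))  # _id is Elasticsearch metadata, not body
    return {"_op_type": "index", "_index": index, "_id": doc_id, "_source": source}
```

Re-reading the document on update keeps the sketch simple. The trade-off is an extra MongoDB lookup per update, which is one reason oplog-based sync gets harder to scale as write volume grows.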

Here’s a crash course to set up your first connector:

- Install the MongoDB Connector tool with pip:

```shell
pip install mongo-connector
```

- With Elasticsearch installed, install the doc manager that matches your Elasticsearch version:

```shell
# Match '5' to your version of Elasticsearch, and append -aws for the Amazon
# version of Elasticsearch. Example: 'mongo-connector[elastic5-aws]'
# (the quotes stop shells such as zsh from expanding the brackets)
pip install 'mongo-connector[elastic5]'
```

- To start the connector, you need a config.json file. The following is a general outline; tune the settings to suit your needs.

```json
{
  "mainAddress": "localhost:27017",
  "oplogFile": "oplog.timestamp",
  "noDump": false,
  "batchSize": -1,
  "verbosity": 2,
  "continueOnError": true,
  "logging": {
    "type": "file",
    "filename": "mongo-connector.log"
  },
  "authentication": {
    "adminUsername": "yourAdminUsername",
    "password": "yourPassword"
  },
  "fields": ["field1", "field2"],
  "namespaces": {
    "include": ["db1.collection1", "db2.collection2"],
    "mapping": {
      "db1.collection1": "myindex.mytype"
    }
  },
  "docManagers": [
    {
      "docManager": "elastic2_doc_manager",
      "targetURL": "localhost:9200"
    }
  ]
}
```

- Once your config.json file is complete, you can run this command to get the connection up and running!

```shell
mongo-connector -c config.json
```

Method 2: Logstash

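Elasticsearch is part of the Elastic Stack, often called the ELK stack, which includes Elasticsearch, Logstash, Kibana and Beats. Together, these tools provide companies with search and analytics capabilities, and Logstash, the stack’s data-processing pipeline, can pull data from MongoDB into Elasticsearch.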

Pros

- Logstash pipelines are inherently flexible and support automatic indexing into Elasticsearch

- The Elastic community maintains extensive documentation and examples to help with configuration

Cons

- Configuring Logstash has a steep learning curve

- Syncing data from MongoDB through Logstash introduces latency

Logstash

- Download and install Logstash by following the official installation instructions.

- Install the MongoDB input plugin:

```shell
bin/logstash-plugin install logstash-input-mongodb
```

- Create a Logstash configuration file, ‘logstash.conf’, with the following input and output settings:

```
input {
  mongodb {
    uri => 'mongodb://localhost:27017/mydb'
    placeholder_db_dir => '/path/to/logstash-mongodb/'
    placeholder_db_name => 'logstash_sqlite.db'
    collection => 'mycollection'
    batch_size => 5000
  }
}

output {
  elasticsearch {
    hosts => ['localhost:9200']
    index => 'myindex'
  }
}
```

- Run Logstash with the config file you just created:

```shell
bin/logstash -f logstash.conf
```
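The placeholder_db_dir and placeholder_db_name settings point at a local SQLite file that the MongoDB input uses to remember how far it has read. This polling-with-a-bookmark pattern is one source of the latency listed in the cons.

The sketch below imitates that pattern with Python’s built-in sqlite3 module. It is a simplified illustration, not the plugin’s implementation. The function poll_batch, the table name placeholders, and the in-memory list standing in for the MongoDB collection are all hypothetical.

```python
import sqlite3
from typing import Any


def poll_batch(
    conn: sqlite3.Connection,
    collection_name: str,
    collection: list[dict[str, Any]],
    batch_size: int,
) -> list[dict[str, Any]]:
    """Return the next batch of documents after the stored placeholder.

    `collection` stands in for a MongoDB collection and must be sorted by
    string `_id`s. The last `_id` returned is persisted in SQLite so the
    next poll resumes from there, even after a restart.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS placeholders "
        "(collection TEXT PRIMARY KEY, last_id TEXT)"
    )
    row = conn.execute(
        "SELECT last_id FROM placeholders WHERE collection = ?", (collection_name,)
    ).fetchone()
    last_id = row[0] if row else None

    # Similar in spirit to find({"_id": {"$gt": last_id}}).sort("_id").limit(n)
    newer = [d for d in collection if last_id is None or d["_id"] > last_id]
    batch = newer[:batch_size]

    if batch:
        # Advance the bookmark to the last document handed out
        conn.execute(
            "INSERT OR REPLACE INTO placeholders (collection, last_id) VALUES (?, ?)",
            (collection_name, batch[-1]["_id"]),
        )
        conn.commit()
    return batch
```

A document inserted between polls only reaches Elasticsearch on the next poll. Note also that a bookmark keyed on _id, as in this sketch, only picks up new documents, not changes to existing ones.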

Method 3: Custom scripts

Many developers build their own solutions using the Elasticsearch client libraries, most commonly for Node.js and Python. The Python program below fetches data from your MongoDB collection and bulk-indexes it into Elasticsearch.

Pros

- A custom solution gives you full control over what happens to your data

- Adding new features is as simple as creating a new function

Cons

- Requires expertise in both the Elasticsearch and MongoDB query languages and client libraries

- Demands significant ongoing maintenance for as long as the solution is in use

- Very little reusability as each solution is custom-made

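```python
from pymongo import MongoClient
from elasticsearch import Elasticsearch
from elasticsearch.helpers import bulk

# Connect to MongoDB
mongo_client = MongoClient("mongodb://localhost:27017/")
mongo_db = mongo_client["mydb"]
mongo_collection = mongo_db["mycollection"]

# Connect to Elasticsearch
es = Elasticsearch(["http://localhost:9200"])


def generate_actions():
    """Yield one bulk-index action per MongoDB document."""
    for doc in mongo_collection.find():
        # Move _id out of the body: it is an Elasticsearch metadata field,
        # and a BSON ObjectId is not JSON-serializable
        doc_id = str(doc.pop("_id"))
        yield {"_index": "myindex", "_id": doc_id, "_source": doc}


# Fetch data from MongoDB and index it into Elasticsearch
def fetch_and_index():
    # Streaming a generator avoids loading the whole collection into memory
    success, _ = bulk(es, generate_actions())
    print(f"Indexed {success} documents")


fetch_and_index()
```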
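Because the script is plain Python, adding a feature can really be as small as adding a function. In the hypothetical sketch below, the action-building step is factored into build_actions, which accepts an optional transform callback. The drop_fields helper is an illustrative transform that removes fields before they are indexed. Neither name comes from pymongo or elasticsearch-py.

```python
from typing import Any, Callable, Iterable, Iterator, Optional

Doc = dict[str, Any]


def build_actions(
    docs: Iterable[Doc],
    index: str,
    transform: Optional[Callable[[Doc], Doc]] = None,
) -> Iterator[Doc]:
    """Yield Elasticsearch bulk actions, optionally transforming each document."""
    for doc in docs:
        source = dict(doc)  # copy so the input document is not mutated
        doc_id = str(source.pop("_id"))  # _id becomes bulk metadata, not body
        if transform is not None:
            source = transform(source)
        yield {"_index": index, "_id": doc_id, "_source": source}


def drop_fields(*names: str) -> Callable[[Doc], Doc]:
    """Return a transform that removes the given top-level fields."""
    def transform(doc: Doc) -> Doc:
        return {k: v for k, v in doc.items() if k not in names}
    return transform
```

In the script above, this could replace generate_actions with a call such as bulk(es, build_actions(mongo_collection.find(), "myindex", drop_fields("password_hash"))), where "password_hash" is a hypothetical field name.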

Option 2: Integrating MongoDB and SingleStore Kai™

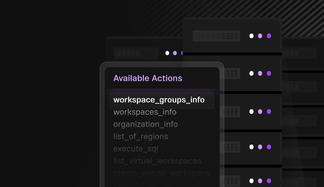

If you’re looking for a more streamlined solution, SingleStore Kai is a MongoDB-compatible API that addresses the common drawbacks of apps built on MongoDB.

To use SingleStore Kai, all you have to do is spin up a SingleStore workspace and point your application at its Kai endpoint. Your queries then run in the already-familiar MongoDB query syntax: no new languages and no lengthy, stressful configuration. Kai supports both OLAP and OLTP workloads, with speeds of up to 900x faster than MongoDB according to SingleStore’s benchmarks. It also offers full-text search over both standard text and JSON data. It can be scaled up or down at will without sacrificing performance, and it can run in any cloud environment.
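Since switching to SingleStore Kai comes down to changing the endpoint, one way to keep that change small is to read the MongoDB connection string from configuration instead of hard-coding it, as the Method 3 script does with "mongodb://localhost:27017/". The helpers below are a minimal sketch of that idea.

The MONGO_URI environment variable, the helper names and the example host are hypothetical. Copy the exact URI options a Kai endpoint needs from the connection string shown for your workspace rather than from here.

```python
import os
from typing import Optional
from urllib.parse import quote_plus, urlencode


def build_mongo_uri(
    host: str,
    user: str,
    password: str,
    port: int = 27017,
    options: Optional[dict[str, str]] = None,
) -> str:
    """Build a mongodb:// connection string with percent-escaped credentials."""
    uri = f"mongodb://{quote_plus(user)}:{quote_plus(password)}@{host}:{port}/"
    if options:
        # URI options (e.g. tls=true); which ones your endpoint needs is an assumption
        uri += "?" + urlencode(options)
    return uri


def get_mongo_uri(default: str = "mongodb://localhost:27017/") -> str:
    """Return MONGO_URI from the environment, falling back to a local MongoDB."""
    return os.environ.get("MONGO_URI", default)
```

With pymongo, the application then calls MongoClient(get_mongo_uri()), so whether it talks to MongoDB or to a Kai endpoint becomes a deployment setting rather than a code change.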

Benefits of SingleStore Kai and MongoDB vs. an Elastic integration:

| | Complexity | Single database solution | Scalability |
|---|---|---|---|
| SingleStore Kai™ | Low: only the MongoDB query language; set up in three easy steps | Yes, with OLAP + OLTP support | SingleStore Kai can handle petabyte-scale data |
| Elasticsearch + MongoDB | High: several query languages that are hard to learn | No: data is copied from your MongoDB oplog into Elasticsearch for OLAP workloads | Elasticsearch loses performance as more nodes are added to a cluster |

The SingleStore Kai benchmarks are conveniently listed and explained here. Try it free today.