Kubernetes is widely discussed, and widely misunderstood. We here at SingleStore are fans of Kubernetes and of the support for stateful workloads that has been added to it since its launch, and we’ve used it to build and manage SingleStore Helios. So we’re taking the time to explain Kubernetes’ architecture, from the top down, to help you decide whether Kubernetes is right for you.

What is Kubernetes?

Kubernetes is an open-source orchestrator originally built by Google and now governed by the Cloud Native Computing Foundation (CNCF). Kubernetes is famous for its extensibility and for zero-downtime rolling updates. Most public and private cloud platforms offer push-button mechanisms to spin up a Kubernetes cluster, pooling provisioned cloud resources into a single platform that can be managed as a unit.

Kubernetes has made a name for itself as the cloud-native platform for running containers. If you’re unfamiliar with it, this guide will help you get acquainted with the various components of Kubernetes and get started running your containers on it. As your needs grow, from stateless services to stateful data stores or custom installations, Kubernetes offers extension points that let you replace pieces with community extensions or custom-built components.

Kubernetes is quite a mouthful. As noted by Dan Richman in GeekWire, “Kubernetes (‘koo-burr-NET-eez’) is the no-doubt-mangled conventional pronunciation of a Greek word, κυβερνήτης, meaning ‘helmsman’ or ‘pilot.’ Get it? Helping you navigate the murky waters of cloud computing…” Because it’s such a wonderfully long word, we often shorten it to “k8s,” which you can read as “k, followed by 8 letters, and then s.”
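The “k8s” abbreviation follows a simple rule (often called a numeronym): keep the first and last letters and replace everything in between with a count of the letters dropped. Here is a minimal Python sketch of that rule; the function name `numeronym` is our own, chosen for illustration.

```python
def numeronym(word: str) -> str:
    """Keep the first and last letters; replace the middle with its length."""
    if len(word) <= 3:
        # Nothing to gain by abbreviating very short words.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("kubernetes"))            # k8s
print(numeronym("internationalization"))  # i18n
```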

Kubernetes lives in the family of orchestrators, but it’s hardly the only one. Before diving into the specifics of this orchestrator, let’s look at what an orchestrator is in general.

Note. We at SingleStore are great believers in Kubernetes. We use Kubernetes to manage SingleStore Helios, our always-on, elastic, managed SingleStore service in the cloud. (You may have noticed how our description of SingleStore Helios resembles descriptions of the capabilities of Kubernetes.) We had to develop a beta Kubernetes Operator for SingleStore to do so. But even with that effort, we were able to develop and release SingleStore Helios much more quickly, and with a much smaller team, than we could have without Kubernetes.

What is an Orchestrator?

Kubernetes is an example of an orchestrator: machinery for bringing a bunch of machines together to act as a single unit. Imagine we collect a dozen or a hundred machines. Though we could connect to each one remotely to configure it, we’d rather give the orchestrator an instruction and have it control each machine for us.

Let’s give the orchestrator an instruction: “Run three copies of my web server, I don’t care how.” (Granted, in practice we’ll specify many more details than this.) The orchestrator takes this instruction, finds free resources on a machine (or machines) of its choosing, and launches the containers. We give the orchestrator our desired state and let it choose how to meet that desired state. Perhaps we give it additional instructions to ensure the web server runs across availability zones or in multiple regions. We don’t need to specify which machine does what, only that the work gets done.
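To make “desired state” concrete, here is a toy placement function in Python. It is a sketch under simplifying assumptions, not how the real Kubernetes scheduler works: the `Machine` and `DesiredState` types, the single CPU resource, the image name, and the rule “fewest replicas in the zone first, then most free CPU” are all hypothetical. We state only how many copies we want; the function decides where they go and spreads them across zones when it can.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    name: str
    zone: str
    free_cpu: float  # CPU cores not yet claimed by other work (hypothetical unit)


@dataclass
class DesiredState:
    image: str
    replicas: int
    cpu_per_replica: float


def place(desired: DesiredState, machines: list[Machine]) -> list[str]:
    """Choose a machine for each replica, spreading across zones when possible."""
    placements: list[str] = []
    per_zone: dict[str, int] = {}
    for _ in range(desired.replicas):
        candidates = [m for m in machines if m.free_cpu >= desired.cpu_per_replica]
        if not candidates:
            raise RuntimeError("not enough free capacity for the desired state")
        # Prefer the zone with the fewest replicas so far, then the machine with the most room.
        best = min(candidates, key=lambda m: (per_zone.get(m.zone, 0), -m.free_cpu))
        best.free_cpu -= desired.cpu_per_replica  # reserve capacity on the chosen machine
        per_zone[best.zone] = per_zone.get(best.zone, 0) + 1
        placements.append(best.name)
    return placements


machines = [
    Machine("node-a", "zone-1", 4.0),
    Machine("node-b", "zone-1", 2.0),
    Machine("node-c", "zone-2", 2.0),
    Machine("node-d", "zone-3", 1.0),
]
web = DesiredState(image="my-web-server:1.0", replicas=3, cpu_per_replica=1.0)
print(place(web, machines))
```

With these sample machines, the three replicas land on `node-a`, `node-c`, and `node-d`, one per zone, even though `node-a` alone has room for all three.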

Because the orchestrator is in control of the machines, it can also monitor the health of the system. What if one web server container crashes? Or what if the machine it’s running on fails, or needs an upgrade? The orchestrator notices the problem and takes corrective action: it schedules a new copy of the web server on other hardware and, where possible, only then takes down the old one. And we get to stay asleep. Which machine did it choose? I don’t know … and I don’t need to know. I need only know that my web server is running and that traffic is being routed to it.
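The self-healing behavior described above is usually built as a control loop: compare the desired state with what is actually running, then act to close the gap. Here is a minimal Python sketch of one pass of such a loop. The `Replica` record, the replica names, and the round-robin choice among healthy machines are hypothetical simplifications; a real orchestrator would pick machines with its scheduler and run the loop continuously.

```python
import uuid
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    machine: str
    healthy: bool = True


def reconcile(desired: int, running: list[Replica], healthy_machines: list[str]) -> list[Replica]:
    """One pass of a control loop: drive the running replicas toward the desired count."""
    alive = [r for r in running if r.healthy and r.machine in healthy_machines]
    failed = [r for r in running if not (r.healthy and r.machine in healthy_machines)]

    missing = desired - len(alive)
    if missing > 0 and not healthy_machines:
        raise RuntimeError("no healthy machines left to run replacements on")
    # Start replacements first, spread round-robin over the healthy machines...
    for i in range(missing):
        machine = healthy_machines[i % len(healthy_machines)]
        alive.append(Replica(f"web-{uuid.uuid4().hex[:5]}", machine))
    # ...and only then clean up the failed copies, telling the humans what happened.
    for r in failed:
        print(f"replaced {r.name}, which was on {r.machine}")
    # If there are too many replicas, scale down to the desired count.
    return alive[:desired]


running = [Replica("web-a", "node-1"), Replica("web-b", "node-2"), Replica("web-c", "node-3")]
# Overnight, node-2 fails. The next pass of the loop notices and replaces web-b.
running = reconcile(3, running, healthy_machines=["node-1", "node-3"])
print([r.machine for r in running])  # ['node-1', 'node-3', 'node-1']
```

Because the loop only compares “what should be” with “what is,” the same code handles a crashed container, a failed machine, and an accidental over-provisioning.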

Examples of Orchestrators

There are many orchestrators, both open-source and proprietary. Examples include Kubernetes, Docker Swarm, Azure Service Fabric, Amazon Elastic Container Service (ECS), and Mesosphere DC/OS. All of these orchestrators can solve the “what to run where” problem. We give one an instruction like “Run three copies of my web server, I don’t care how,” and the orchestrator launches three containers and reports their status. Most orchestrators will also restart a container when it fails and notify the humans that they have done so. (This lets the humans, for instance, take action to make failures less likely in the future.)

Each orchestrator has strengths and weaknesses, and each is best suited to particular jobs. I’m not familiar with the needs of your organization, so I can’t presume to dictate which orchestrator is right for you. Walk into your IT department and say “Kubernetes? Docker Swarm?”, for example, and some bright-eyed engineer will talk your ear off for an hour explaining why one of them is the right one for your organization. I completely agree: that is the right choice for you.

But for the sake of this post, let’s assume we choose Kubernetes. 😀 Kubernetes isn’t novel because it can pool machines together into a single cloud OS, nor because it keeps containers running. What sets it apart is that it’s highly extensible and widely adopted. Let’s dig in and see more.

Kubernetes Architecture

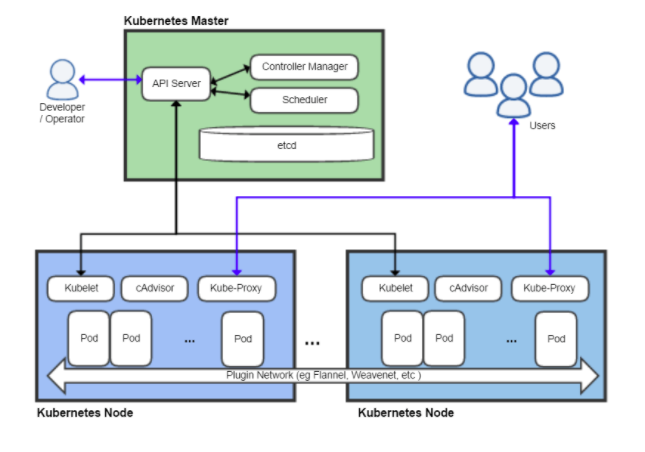

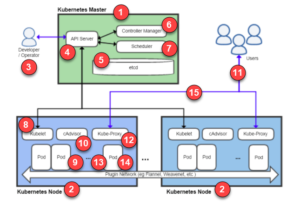

Most introductory tutorials for Kubernetes show a graphic like this, step away, and say, “So now that I’ve explained it to you…” <scratches head>. The first time I saw a diagram like this one, I too was incredibly confused. What are all the boxes and arrows? What does that mean to me and my app?

Note. The graphic above is from VitalFlux, which takes on the heavy lifting of explaining Kubernetes in more detail than we have provided here.

I’ll explain each of these pieces. In an exceptionally oversimplified explanation, here’s what this diagram shows:

- The Control Plane is the set of machines that manage the cluster: all the green boxes. (If this were an organization chart, it would be labeled “management.”) We can think of this as all the plumbing in the system. The work of your web properties and data stores doesn’t run here. You’ll generally want a few machines doing this work: three, five, or nine. In many managed cloud k8s services, the provider runs these machines for you, often at little or no extra cost.

- The Worker Nodes are all the machines doing work for you and your business: all the blue boxes. These machines run your web properties, back-end services, scheduled jobs, and data stores. (You may choose to store the actual data elsewhere, but the engine that runs the data store will run here.)

- As a developer or ops engineer, you’ll likely use kubectl, the command-line utility for Kubernetes, to start, stop, and change content in the cluster.

- kubectl connects to the Kubernetes API server to give it instructions.

- The API server stores data in etcd, the Kubernetes data store.

- The Controller Manager watches the API server and notices the change recorded in etcd.

- Controllers in the Controller Manager create the objects needed to reach the desired state (for example, the Pods for a new Deployment), and the Scheduler picks a Worker Node (one of the blue boxes) for each new Pod.

- The Scheduler records its choice through the API server, and the Kubelet on that node picks up the change. The Kubelet is responsible for the node (machine).

- The Kubelet starts the Pod, asking the container runtime (such as containerd) to run its containers.

- The Kubelet watches the running Pods and reports their status back to the API server, which stores it in etcd. (cAdvisor, built into the Kubelet, collects resource-usage metrics for the containers.)

- When a user visits a website, the traffic comes in from the Internet through a load balancer, which chooses one of the Worker Nodes (machines) to handle the request.

- The traffic is forwarded to Kube-Proxy.

- Kube-Proxy identifies which Pod should receive the traffic and directs it there.

- The Pod wraps the container, which processes the request, and returns the response.

- The response flows back to the user through Kube-Proxy and the load balancer.
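To make the two flows above concrete, here is a toy, in-memory model in Python. It is a sketch under heavy assumptions, not the Kubernetes API: every class, function, and field name is hypothetical, a plain dict stands in for etcd, watches are synchronous callbacks, and scheduling and proxying use simple least-loaded and round-robin rules. What it does show is the shape of the design: kubectl only records desired state with the API server, and every other component reacts by watching the API server.

```python
import itertools
from collections import defaultdict
from typing import Callable

Watcher = Callable[[str, dict], None]


class APIServer:
    """Toy API server; a plain dict stands in for etcd."""

    def __init__(self) -> None:
        self.etcd: dict[str, dict] = {}
        self.watchers: dict[str, list[Watcher]] = defaultdict(list)

    def watch(self, kind: str, callback: Watcher) -> None:
        self.watchers[kind].append(callback)

    def put(self, key: str, obj: dict) -> None:
        self.etcd[key] = obj
        for callback in list(self.watchers[obj["kind"]]):  # notify everyone watching this kind
            callback(key, obj)

    def objects(self, kind: str) -> list[tuple[str, dict]]:
        return [(k, o) for k, o in self.etcd.items() if o["kind"] == kind]


def controller_manager(api: APIServer) -> None:
    """Deployment controller: create Pods until each Deployment has enough."""
    def on_deployment(key: str, dep: dict) -> None:
        have = sum(1 for _, pod in api.objects("Pod") if pod["owner"] == key)
        for i in range(have, dep["replicas"]):
            api.put(f"pods/{dep['name']}-{i}", {"kind": "Pod", "owner": key, "image": dep["image"],
                                                "node": None, "status": "Pending"})
    api.watch("Deployment", on_deployment)


def scheduler(api: APIServer, nodes: list[str]) -> None:
    """Bind each unscheduled Pod to the node currently running the fewest Pods."""
    def on_pod(key: str, pod: dict) -> None:
        if pod["node"] is None:
            load = {n: sum(1 for _, p in api.objects("Pod") if p["node"] == n) for n in nodes}
            api.put(key, {**pod, "node": min(nodes, key=load.__getitem__)})
    api.watch("Pod", on_pod)


def kubelet(api: APIServer, node: str, runtime_log: list[str]) -> None:
    """Start Pods bound to this node, then report their status back."""
    def on_pod(key: str, pod: dict) -> None:
        if pod["node"] == node and pod["status"] == "Pending":
            runtime_log.append(f"{node}: run {pod['image']}")  # stands in for the container runtime
            api.put(key, {**pod, "status": "Running"})
    api.watch("Pod", on_pod)


def kube_proxy(api: APIServer, owner: str) -> Callable[[str], str]:
    """Forward each request to one of the owner's Running Pods, round-robin."""
    turn = itertools.count()

    def forward(request: str) -> str:
        pods = sorted(k for k, p in api.objects("Pod")
                      if p["owner"] == owner and p["status"] == "Running")
        if not pods:
            raise RuntimeError("no Running Pods to forward to")
        return f"{pods[next(turn) % len(pods)]} handled {request}"
    return forward


api = APIServer()
runtime_log: list[str] = []
nodes = ["node-1", "node-2"]
scheduler(api, nodes)
for n in nodes:
    kubelet(api, n, runtime_log)
controller_manager(api)

# "kubectl apply": just record the desired state; the watchers do the rest.
api.put("deployments/web", {"kind": "Deployment", "name": "web",
                            "image": "my-web-server:1.0", "replicas": 3})
for key, pod in api.objects("Pod"):
    print(key, pod["node"], pod["status"])

proxy = kube_proxy(api, "deployments/web")
print(proxy("GET /"))
print(proxy("GET /"))
```

With two nodes and three replicas, the least-loaded rule places `web-0` and `web-2` on `node-1` and `web-1` on `node-2`, and the proxy then rotates requests across the Running Pods. Note that no component calls another directly; each one only reads and writes through the API server.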

This is likely the first and last time you’ll care about Kubernetes at this level. (The knee bone’s connected to the hip bone.) But as you look at the graphic and the flow of data, one thing stands out: Kubernetes is great for microservices because Kubernetes is microservices. Each of these pieces is a separate component, and many of them run as containers inside the Kubernetes cluster itself. Each can be replaced with an alternate implementation, either from the community or one you build. Kubernetes is a great place to host your services because Kubernetes hosts its own services this way as well.
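The “swap in your own piece” idea works because each component depends on a narrow contract rather than on a specific implementation. Here is a minimal Python sketch of that idea using a hypothetical `SchedulingPolicy` protocol, a least-loaded policy, and a custom policy that packs Pods tightly; both policies ignore node capacity for brevity. In a real cluster you might do this by, for example, running an additional scheduler and naming it in a Pod’s `schedulerName` field.

```python
from typing import Protocol


class SchedulingPolicy(Protocol):
    def pick(self, load: dict[str, int]) -> str:
        """Given node name -> Pods already placed, return the node for the next Pod."""
        ...


class LeastLoaded:
    """Spread Pods out: choose the node with the fewest Pods so far."""

    def pick(self, load: dict[str, int]) -> str:
        return min(load, key=load.__getitem__)


class PackTightly:
    """Hypothetical custom policy: fill the busiest node first so idle nodes can be removed."""

    def pick(self, load: dict[str, int]) -> str:
        return max(load, key=load.__getitem__)


def schedule(pods: int, nodes: list[str], policy: SchedulingPolicy) -> dict[str, int]:
    """Place `pods` Pods using whichever policy is plugged in; this caller never changes."""
    load = {n: 0 for n in nodes}
    for _ in range(pods):
        load[policy.pick(load)] += 1
    return load


print(schedule(4, ["node-1", "node-2"], LeastLoaded()))  # {'node-1': 2, 'node-2': 2}
print(schedule(4, ["node-1", "node-2"], PackTightly()))  # {'node-1': 4, 'node-2': 0}
```

Swapping the policy changes where work lands without touching the code that asks for placement, which is the same property that lets Kubernetes accept community or custom-built components.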

Conclusion

Kubernetes is the orchestrator for the modern web. Whether you’re running stateless website code or a stateful data store such as SingleStore, Kubernetes can scale to match your needs. As the emerging industry-standard platform, it’s extensible, letting you replace portions of the cloud OS with open-source community extensions or components you build yourself. Kubernetes automatically monitors your crucial business web properties and can restart failed services without manual intervention.

If you’re interested in trying some or all of this with SingleStore, you can try SingleStoreDB Self-Managed or SingleStore Helios for free today, or contact us to discuss SingleStore and SingleStore Helios in depth.