Last week at the Strata Data Conference in San Jose, I had the privilege of demonstrating SingleStore processing over a trillion rows per second on the latest Intel Skylake servers.

It’s well known that having an interactive response time of under a quarter of a second gives people incredible satisfaction. When you deliver response time that drops down to about a quarter of a second, results seem to be instantaneous to users.

But with large data sets and concurrency needs, giving all customers that level of speed can seem beyond reach. So developers sometimes take shortcuts, such as precomputing summary aggregates. That can lead to a rigid user experience where if you tweak your query a little, for example adding an extra grouping column, suddenly it runs orders of magnitude slower. And it also means your answers are not real time, i.e. not on the latest data.

SingleStore gives you the interactive response time your users want, on huge data sets, with concurrent access, without resorting to precomputing results.

Running at a Trillion Rows Per Second

SingleStoreDB Self-Managed 6, which shipped in late 2017, contains new technology for executing single-table group-by/aggregate queries on columnstore data incredibly fast. The implementation is based on these methods: (1) operations done directly on encoded (compressed) data in the columnstore, (2) compilation of queries to machine code, (3) vectorized execution, and (4) use of Intel AVX2 single instruction, multiple data (SIMD) enhancements. When the group-by columns are encoded with dictionary, integer value, or run-length encoding, SingleStore runs a one-table group-by/aggregate at rates exceeding three billion rows per second per core at its peak. The fewer the number of groups and the simpler the aggregate functions, the faster SingleStore goes.

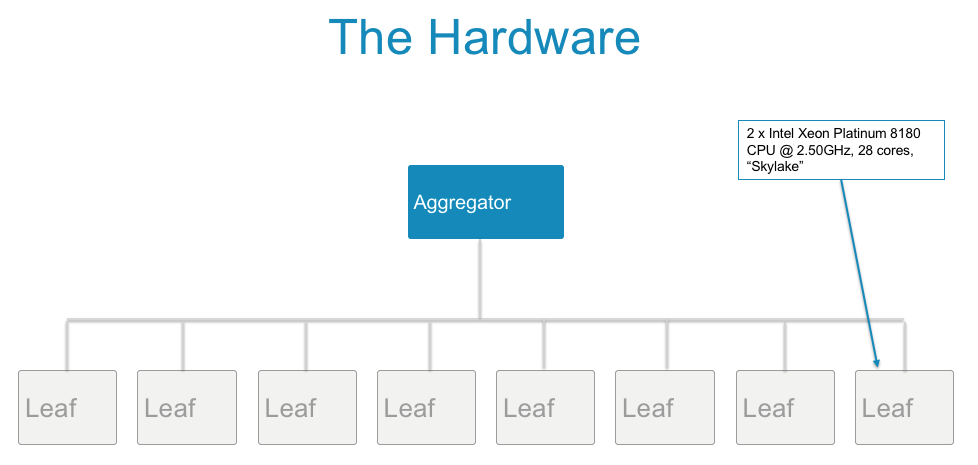

This incredible per-core speed gave us the idea to shoot for the trillion-rows-per-second mark. To accomplish this, with a realistic query, I wrote a data generator to build a data set that simulates stock trades on the NASDAQ. Then we talked to our partners at Intel, and they generously gave us access to servers in their lab with the latest Skylake processors. These machines have two Intel® Xeon® Platinum 8180 processors each, which have 28 cores, for a total of 56 cores per server. I created a SingleStore cluster with one aggregator node and eight leaf nodes, with one server for each node, as shown in Figure 1. This cluster had 2 * 28 * 8 = 448 total cores on the leaves — the most important number that determined the overall rows-per-second rate we could get.

Figure 1. The hardware arrangement used to break a trillion rows per second.

I installed SingleStore on this cluster with two SingleStore leaf nodes on each leaf server, with non-uniform memory access (NUMA) optimizations enabled, so each SingleStore leaf node software process would run on a dedicated Skylake chip. Then I created a database trades with one partition per core to get optimal scan performance. Once that was complete, I loaded billions of rows of data representing stock trades (really decades worth of trades) into a table called trade. The larger capitalization a stock is, the more trades it has. Here’s a tiny sample of the data:

memsql> select id, stock_symbol, shares, share_price, trade_time

from trade

limit 10;

+-------------+--------------+-----------+-------------+----------------------------+

| id | stock_symbol | shares | share_price | trade_time |

+-------------+--------------+-----------+-------------+----------------------------+

| 10183273878 | CXRX | 600.0000 | 22.0000 | 2018-03-08 08:50:57.000000 |

| 10184155113 | CXRX | 700.0000 | 31.0000 | 2018-03-08 08:50:57.000000 |

| 10183273871 | CXRX | 500.0000 | 8.0000 | 2018-03-08 07:50:57.000000 |

| 10185917724 | CXRX | 1000.0000 | 63.0000 | 2018-03-08 06:50:57.000000 |

| 10183273873 | CXRX | 1000.0000 | 74.0000 | 2018-03-08 04:50:57.000000 |

| 10183273874 | CXRX | 800.0000 | 96.0000 | 2018-03-08 10:50:57.000000 |

| 10183273865 | CXRX | 600.0000 | 82.0000 | 2018-03-08 06:50:57.000000 |

| 10183273876 | CXRX | 600.0000 | 40.0000 | 2018-03-08 05:50:57.000000 |

| 10183273877 | CXRX | 700.0000 | 47.0000 | 2018-03-08 05:50:57.000000 |

| 10183273869 | CXRX | 100.0000 | 15.0000 | 2018-03-08 05:50:57.000000 |

+-------------+--------------+-----------+-------------+----------------------------+

This resulted in how many rows? Check this out:

memsql> select format(count(*), 0) as c from trade;

+----------------+

| c |

+----------------+

| 57,756,221,440 |

+----------------+

That’s right, about 57.8 billion rows!

Then I ran this query to find the top 10 most traded stocks of all time:

select stock_symbol, count(*) as c

from trade

group by stock_symbol

order by c desc

limit 10;

And got this result:

+--------------+----------+

| stock_symbol | c |

+--------------+----------+

| GILD | 39321600 |

| AABA | 39190528 |

| KHC | 39190528 |

| AMZN | 39190528 |

| ASML | 39059456 |

| CHTR | 39059456 |

| TXN | 39059456 |

| FB | 39059456 |

| CELG | 39059456 |

| TIG | 39059456 |

+--------------+----------+

10 rows in set (0.05 sec)

10 rows in set (0.05 sec)

Yes, this query ran in 5 one-hundredths of a second! To get an accurate scan rate, we need some more significant figures though. So I wrote a stored procedure to run it 100 times in a loop:

memsql> call profile_query(100);

Query OK, 0 rows affected (4.51 sec)

This gives an average response time for the query of 0.0451 second, which yields the following scan rate:

memsql> select format(count(*)/0.0451, 0) as c from trade;

+-------------------+

| c |

+-------------------+

| 1,280,625,752,550 |

+-------------------+

Yes, that is 1.28 trillion rows per second!

We’re scanning and processing 2.86 billion rows per second per core, through the beauty of operations on encoded data, vectorization, and SIMD. We’re actually spending some time on every row, and not precalculating the result, to achieve this.

What does it mean?

The fact that we can break the trillion-row-per-second barrier with SingleStore on 448 cores worth of Intel’s latest chips is significant in a couple of ways. First, you can get interactive response time on mammoth data sets without precalculating results. This allows more flexible interaction from users, and encourages them to explore the data. It also enables real-time analytics. Second, it allows highly concurrent access by hundreds of users on smaller data sets, with all of them getting interactive response times. Again, these users will get the benefit of analytics on the latest data, not a precalculated summary snapshot. SingleStore enables all this through standard SQL that your developers already are trained to use.

Also, we achieved this on industry-standard hardware from Intel, not a special-purpose database machine. These are machines that your organization can afford to buy, or that you can rent from your favorite cloud provider, without zeroing out your bank account.

What could you do for your users with SingleStore on today’s Intel chips? Try us out, and watch below how we achieved a trillion rows per second.

Appendix: For you hardware geeks out there

If you’re craving more details about the Skylake chips we used, here’s the output of the Linux lscpu utility run on one of the servers making up the 9 total servers in the cluster:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 112

On-line CPU(s) list: 0-111

Thread(s) per core: 2

Core(s) per socket: 28

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Platinum 8180 CPU @ 2.50GHz

Stepping: 4

CPU MHz: 2501.000

BogoMIPS: 4993.28

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 39424K

NUMA node0 CPU(s): 0-27,56-83

NUMA node1 CPU(s): 28-55,84-111

The data was stored on SSDs, though that isn’t really significant since we ran the queries warm-start, so the data was already cached in memory. The network is 10-gigabit ethernet.