Elephants can’t jump, trot or gallop. But together, they can stampede — which is exactly what enterprises are doing to move away from Hadoop. This blog looks at how three industry leaders have embraced SingleStore as the successor to Hadoop, and the game-changing benefits they’ve received. Read on to learn how Kellogg’s, Comcast and a Tier 1 wealth management firm successfully “augmented or retired the elephant.”

Kellogg’s: Real-time visibility into supply chain profitability

Reducing a 24-hour ETL process to 43 minutes

From cereal to potato chips, Kellogg’s puts some of the world’s most popular packaged foods on grocery shelves every day. But its supply chain dashboards, powered by Hadoop and SAP Object Data Services, made it impossible for managers to get the fresh data necessary for daily profitability analyses. Hadoop’s batch data ingestion required an ETL process of about 24 hours, and interactive queries were painfully slow.

From batch ETL to real-time insights

Kellogg’s looked to SingleStore to replace Hadoop for improved speed-to-insight and concurrency. Its priority was twofold: real-time ingestion of data from multiple supply chain applications, and fast SQL capabilities to accelerate queries made through Kellogg’s Tableau data visualization platform.

Deploying SingleStore on AWS with SingleStore Pipelines, Kellogg’s was able to continuously ingest data from AWS S3 buckets and Apache Kafka for up-to-date analysis and reporting. SingleStore reduced the ETL process from 24 hours to an average of 43 minutes, a more than 30x improvement. The team was then able to bring three years of archived data into SingleStore without increasing ETL timeframes, and subsequently incorporated external data sources such as Twitter as well.
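For readers who want to check the speed-up figure, here is a quick back-of-the-envelope calculation. It uses only the two durations quoted above; the variable names are illustrative and not taken from Kellogg’s or SingleStore.

```python
# Back-of-the-envelope check of the ETL speed-up quoted in the text.
HOURS_BEFORE = 24     # batch ETL duration on Hadoop, as stated above
MINUTES_AFTER = 43    # average ETL duration on SingleStore, as stated above

minutes_before = HOURS_BEFORE * 60          # 24 hours expressed in minutes
speedup = minutes_before / MINUTES_AFTER    # ratio of old to new duration

print(f"{minutes_before} min -> {MINUTES_AFTER} min is about {speedup:.1f}x faster")
```

The ratio comes out slightly above the rounded 30x headline number.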

Direct integration with Tableau

Finally, Kellogg’s was able to analyze customer logistics and profitability data daily, running Tableau visualizations directly on top of SingleStore rather than on a data extract. With SingleStore directly integrated with Tableau, Kellogg’s has maintained an 80x improvement on analytics performance while supporting hundreds of concurrent business users.

“We have to be able to provide business value on each and every project. Because every business user is requesting speed and the ability to move at an incredibly iterative pace, we need to be able to provide that and in-memory [SingleStore] allows us to do so.”

-- JR Cahill, Principal Architect for Global Analytics, Kellogg’s

Read the case study: “How Kellogg Reduced 24-Hour ETL to Minutes and Boosted BI Speed by 20x.”

Comcast: Analytic power to help customers in real time, at scale

Eliminating data bottlenecks to reduce truck rolls

With more than 18 million customer relationships for its video services, Comcast has built world-class automated equipment set-up capabilities, allowing most customers to quickly get started with their favorite entertainment. But when problems arise, customers are quick to call the support desk to ask for help. If issues can’t be resolved through a phone call, a frustrated customer must wait for an appointment with a Comcast technician – in industry parlance, a dreaded “truck roll.”

Hadoop can’t power fast analytics at scale

To proactively address installation challenges, Comcast wanted to develop a system that would gather device telemetry data from across its vast network, alerting technical service reps to specific customers’ installation issues as they occurred, in real time. In that moment a Comcast rep could proactively reach out to a customer, ready to assist.

With an existing analytics system powered by Hadoop, Comcast could not capture telemetry data fast enough, at scale, from cable modems being installed on its network. The Hadoop system was built for after-the-fact analysis. To help customers in real time, Comcast needed to capture and analyze 300,000 network events per second, a rate that was an insurmountable bottleneck for Hadoop.
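To put that rate in perspective, the sketch below converts it into a daily volume and a per-event time budget. It assumes the 300,000 events-per-second rate is sustained around the clock, which the case study does not state; treat the results as an upper-bound illustration rather than a reported Comcast figure.

```python
# Rough scale of a 300,000 events-per-second stream.
# Assumption (not from the source): the rate is sustained for a full day.
EVENTS_PER_SECOND = 300_000
SECONDS_PER_DAY = 24 * 60 * 60

events_per_day = EVENTS_PER_SECOND * SECONDS_PER_DAY

# Average time available per event if events were handled strictly one at a time.
budget_microseconds = 1_000_000 / EVENTS_PER_SECOND

print(f"{events_per_day:,} events per day")
print(f"about {budget_microseconds:.2f} microseconds per event")
```

A per-event budget of a few microseconds is why a batch-oriented system built for after-the-fact analysis struggles here, and why ingestion has to be parallel and streaming.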

SingleStore delivers enabling technology

Comcast needed a modern data platform to support the streaming data ingestion and the high-concurrency, low-latency queries necessary for real-time anomaly detection. TCO and scalability were concerns, as well; data growth had to be optimized on commodity infrastructure. The telecommunications giant chose SingleStore to improve its ability to respond fast, with answers in real time.

Comcast augmented its Hadoop environment with SingleStore to enable fast analytics on streaming data. SingleStore handily met Comcast’s 300,000 event-per-second SLA, allowing technicians to identify customers needing help. This approach dramatically reduces truck rolls and improves customer satisfaction ratings; for example, in its Q2 2021 earnings call with investors, Comcast reported a 22% reduction in truck rolls despite an overall 5% increase in its customer base.

Read the case study: “Real-Time Stream Processing Architecture With Hadoop and SingleStore.”

Tier 1 Wealth Management Firm: Building a dashboard that delivers

Supporting the complex portfolio analyses of 40,000 concurrent users

The high net-worth clients of the wealth management division of a Tier 1 investment firm mirror the institution’s outsize reputation: smart, quick-thinking and very demanding. The wealth management firm was under pressure to provide fast, interactive experiences on portfolio dashboards, especially during critical market events.

Unfortunately, the firm’s legacy data architecture, based on Hadoop, was routinely stressed by fast-changing market events. Data latency was compounded by tens of thousands of users simultaneously accessing the system. The firm was further constrained by batch ETL processes to ingest data, rendering real-time insights impossible. Worse still, during batch uploads, client queries were frequently locked out.

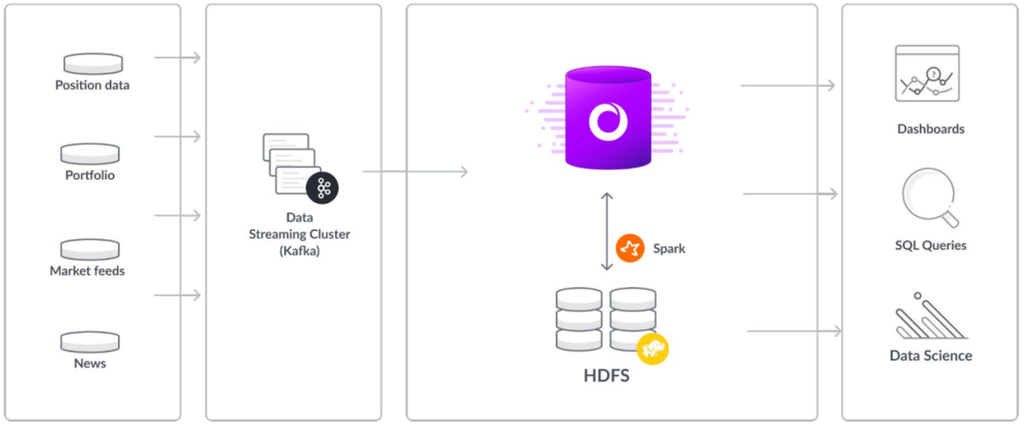

Augmenting Hadoop with a fast layer of SingleStore

To vanquish the lagging performance of the dashboards, which were often accessed by 40,000 concurrent users, the Tier 1 wealth management firm augmented its legacy Hadoop/HDFS environment with SingleStore. As pictured in Figure 2, SingleStore was configured as the fast layer of the architecture, with Hadoop/HDFS serving as a data lake for long-term data storage.

Meeting expectations for instant answers

With SingleStore now serving fast analytics, the dashboards and other client-facing applications deliver instant query responses in 10 to 20 milliseconds, with zero data latencies. The system supports 40,000 concurrent users with ease, delivering millisecond response times even when market events cause spikes in usage.

Furthermore, SingleStore’s streaming ingest capabilities make it possible for the Tier 1 firm to provide five years of historical data instead of one. Applications and user queries can draw upon five times as much data for deeper portfolio and market analysis.

A Tier 1 wealth management firm uses SingleStore to transform its legacy Hadoop environment into a tightly coupled data lake.

Read the case study: “Modernizing the Wealth Management Experience to Drive Competitive Advantage.”

If you’re considering Hadoop exit strategies and alternatives, SingleStore is one of the best options. Take the first step in joining Kellogg’s, Comcast, a Tier 1 wealth management firm and many other industry leaders.

Watch the webinar: “Augmenting or Replacing Hadoop With SingleStore.”