In today's data-driven world, the ability to efficiently process and analyze large volumes of information is crucial for making informed business decisions.

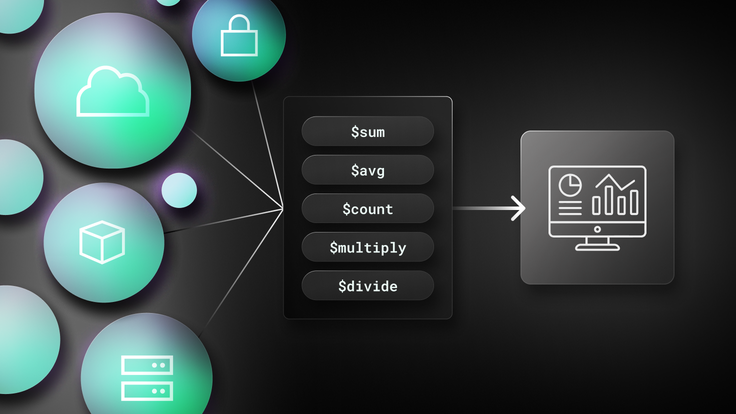

MongoDB's aggregation operators offer powerful tools to transform raw data into meaningful insights, enabling developers and analysts to perform complex calculations, generate summaries and manipulate data with ease. Whether you're building real-time dashboards, conducting in-depth analytics or optimizing data workflows, mastering these operators is essential. This article explores the key concepts and practical use cases of MongoDB's aggregation operators, providing you with the knowledge to harness their full potential in your projects.

Understanding aggregation operators

What are aggregation operators?

Aggregation operators are expressions used in MongoDB's aggregation framework to perform calculations on data, manipulate values and generate summarized results from one or more collections in a MongoDB database.

They combine individual data points to produce meaningful insights, making them essential in large data processing workflows. Aggregation operators work within MongoDB's aggregation framework to help you analyze and transform your data.

Aggregation operators are crucial for information fusion, which is the process of merging multiple data points or sources to derive a unified, useful conclusion.

For example, in data analytics, aggregation operators help summarize details including averages, totals, counts and other computed values that give meaning to raw data. They function similarly to the formulas in spreadsheet tools that aggregate data from multiple cells into a single value or outcome.

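In MongoDB, common types of aggregation operators include arithmetic expression operators (e.g., $add, $multiply), accumulators (e.g., $sum, $avg), and comparison, array, string and date operators. The broader literature on information fusion also describes probabilistic, distance, linguistic and intuitionistic aggregation operators, which handle fuzzy or imprecise data values; these are mathematical concepts rather than MongoDB operators.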

Practical use cases of aggregation operators

Calculating totals and averages

Using operators like $sum and $avg, you can calculate total sales, average customer ratings or overall engagement metrics.

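```java
import com.mongodb.client.model.Accumulators;
import com.mongodb.client.model.Aggregates;
import java.util.Arrays;

// `collection` is a MongoCollection<Document>.
collection.aggregate(
    Arrays.asList(
        Aggregates.group(
            null,
            Accumulators.sum("totalSales", "$sales"),
            Accumulators.avg("avgRating", "$rating")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```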
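One detail worth knowing: $sum and $avg skip values that are missing or non-numeric, so a document without a rating does not pull the average down, and $avg returns null if no numeric values exist at all. The plain-Java sketch below imitates the group stage above over in-memory maps to make that concrete. The class name and sample data are hypothetical, and it models the operator semantics rather than the driver API (for instance, it always returns a double, whereas MongoDB preserves integer types where it can).

```java
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of:
//   { $group: { _id: null, totalSales: { $sum: "$sales" }, avgRating: { $avg: "$rating" } } }
// It illustrates operator semantics only; it does not talk to MongoDB.
class TotalsSketch {

    // Like $sum: missing and non-numeric values contribute nothing.
    static double sum(List<Map<String, Object>> docs, String field) {
        double total = 0;
        for (Map<String, Object> doc : docs) {
            Object value = doc.get(field);
            if (value instanceof Number) {
                total += ((Number) value).doubleValue();
            }
        }
        return total;
    }

    // Like $avg: averages only numeric values and returns null if there are none.
    static Double avg(List<Map<String, Object>> docs, String field) {
        double total = 0;
        int count = 0;
        for (Map<String, Object> doc : docs) {
            Object value = doc.get(field);
            if (value instanceof Number) {
                total += ((Number) value).doubleValue();
                count++;
            }
        }
        if (count == 0) {
            return null;
        }
        return total / count;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> docs = List.of(
            Map.of("sales", 100, "rating", 4),
            Map.of("sales", 250, "rating", 5),
            Map.of("sales", 50)); // no rating field
        System.out.println("totalSales = " + sum(docs, "sales")); // totalSales = 400.0
        System.out.println("avgRating = " + avg(docs, "rating")); // avgRating = 4.5
    }
}
```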

Counting documents

The $count stage returns the number of documents that reach it, so placing it after a $match stage counts the documents meeting specific criteria.

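```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(Filters.eq("status", "active")),
        Aggregates.count("activeCount")
    )
).forEach(doc -> System.out.println(doc.toJson()));
```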

Field calculations

Aggregation operators can calculate new fields dynamically. For example, you can use $multiply to compute product prices with discounts, or $divide to determine ratios between different values.

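```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.computed(
                    "discountedPrice",
                    new Document("$multiply", Arrays.asList("$price", 0.9))
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```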

Building dashboards

Aggregation operators form the backbone of many real-time dashboards where aggregated metrics like sales figures, customer counts and product inventories need to be continuously updated.

Aggregation pipeline

Overview of the aggregation pipeline

The aggregation pipeline in MongoDB is a powerful framework for processing data. It allows you to perform multiple transformations in sequence, making it particularly useful for data analysis and reporting. The aggregation pipeline is designed to mimic the structure of Unix-style command pipelines, where data flows from one stage to another, each pipeline stage applying a specific transformation.

The pipeline passes documents through a sequence of stages that filter, project, group, sort and reshape the data. Each stage performs one operation on the documents it receives and hands its output to the next stage, so the final output is the original data set transformed and aggregated to fit the query's purpose.

Key stages of an aggregation pipeline

$match

Filters documents to pass only those that meet the specified criteria to the next stage. This stage is similar to a find() query, and is often used as an initial step to reduce the volume of data to be processed.

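```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.eq("status", "completed")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```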

$group

Groups documents by a specified field and performs aggregation operations, like $sum or $avg, on the grouped data. This stage is useful for generating summary information, like total sales by region.

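```java
import com.mongodb.client.model.Accumulators;
import com.mongodb.client.model.Aggregates;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.group(
            "$region",
            Accumulators.sum("totalSales", "$sales")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```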
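Conceptually, $group partitions the incoming documents by the value of its _id expression and runs each accumulator once per partition. Java's Collectors.groupingBy does the same thing in memory, so the sketch below mirrors the $group stage above. The Sale class and sample values are hypothetical stand-ins for documents; this is an illustration of the semantics, not driver code.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical in-memory model of:
//   { $group: { _id: "$region", totalSales: { $sum: "$sales" } } }
class GroupByRegionSketch {

    // Minimal stand-in for a sales document.
    static final class Sale {
        final String region;
        final double sales;

        Sale(String region, double sales) {
            this.region = region;
            this.sales = sales;
        }
    }

    // Partition by region (the _id expression) and sum each partition.
    // TreeMap only makes the printed order predictable; $group itself
    // does not guarantee any output order.
    static Map<String, Double> totalSalesByRegion(List<Sale> docs) {
        return docs.stream().collect(Collectors.groupingBy(
            s -> s.region, TreeMap::new, Collectors.summingDouble(s -> s.sales)));
    }

    public static void main(String[] args) {
        List<Sale> docs = List.of(
            new Sale("east", 100), new Sale("west", 40), new Sale("east", 60));
        System.out.println(totalSalesByRegion(docs)); // {east=160.0, west=40.0}
    }
}
```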

$project

Shapes the documents by including, excluding or adding new fields. This is useful for controlling which data points are visible in the output and for creating computed fields.

```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.include("name", "price"),
                Projections.computed(
                    "priceWithTax",
                    new Document("$multiply", Arrays.asList("$price", 1.1))
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```

$sort

Sorts documents based on a specified field or fields. Sorting helps present the data in a logical order, like arranging sales data from highest to lowest.

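```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Sorts;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.sort(
            Sorts.descending("totalSales")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```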

$limit and $skip

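These stages paginate results: $skip discards a given number of documents and $limit caps how many are passed on. Put $skip before $limit (otherwise the skip eats into the page rather than moving past it), and sort first so that pages stay stable between requests.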

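```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Sorts;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.sort(Sorts.ascending("_id")), // stable order for paging
        Aggregates.skip(5),                      // skip the first 5 documents...
        Aggregates.limit(10)                     // ...then return the next 10
    )
).forEach(doc -> System.out.println(doc.toJson()));
```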
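Stage order matters here because each stage only sees the output of the previous one. The in-memory sketch below uses Java streams, whose skip and limit behave like $skip and $limit, to compare the two orderings on 20 numbered documents. The class and method names are illustrative only.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical in-memory illustration of why stage order matters for $skip and $limit.
class PaginationSketch {

    // $skip then $limit: drop `skip` items, then keep at most `limit` (a proper page).
    static List<Integer> skipThenLimit(List<Integer> docs, int skip, int limit) {
        return docs.stream().skip(skip).limit(limit).collect(Collectors.toList());
    }

    // $limit then $skip: keep the first `limit` items, then drop `skip` of those.
    static List<Integer> limitThenSkip(List<Integer> docs, int skip, int limit) {
        return docs.stream().limit(limit).skip(skip).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> docs = IntStream.rangeClosed(1, 20).boxed().collect(Collectors.toList());
        System.out.println(skipThenLimit(docs, 5, 10)); // [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]
        System.out.println(limitThenSkip(docs, 5, 10)); // [6, 7, 8, 9, 10]
    }
}
```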

$lookup

This performs a left outer join with another collection, allowing data from different collections to be combined in a single pipeline.

```java
import com.mongodb.client.model.Aggregates;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.lookup(
            "orders",        // Foreign collection
            "customerId",    // Local field
            "customerId",    // Foreign field
            "customerOrders" // Output array field
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```
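To make the left-outer-join behavior concrete, here is a hypothetical plain-Java model of this equality-match form of $lookup over in-memory maps. Every local document is kept, and matching foreign documents are collected into the output array, which is empty when nothing matches. It is a sketch of the semantics, not of how MongoDB executes the join.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical in-memory model of the equality-match form of $lookup:
//   { $lookup: { from: "orders", localField: "customerId",
//                foreignField: "customerId", as: "customerOrders" } }
class LookupSketch {

    static List<Map<String, Object>> lookup(List<Map<String, Object>> local,
                                            List<Map<String, Object>> foreign,
                                            String localField,
                                            String foreignField,
                                            String as) {
        List<Map<String, Object>> out = new ArrayList<>();
        for (Map<String, Object> doc : local) {
            List<Map<String, Object>> matches = new ArrayList<>();
            for (Map<String, Object> candidate : foreign) {
                // A missing field reads as null here, so a document lacking localField
                // matches foreign documents whose foreignField is missing or null,
                // mirroring how $lookup treats missing fields.
                if (Objects.equals(doc.get(localField), candidate.get(foreignField))) {
                    matches.add(candidate);
                }
            }
            // Left outer join: every local document survives; no match means an empty array.
            Map<String, Object> joined = new LinkedHashMap<>(doc);
            joined.put(as, matches);
            out.add(joined);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> customers = List.of(
            Map.of("customerId", 1, "name", "Ada"),
            Map.of("customerId", 2, "name", "Lin"));
        List<Map<String, Object>> orders = List.of(
            Map.of("customerId", 1, "total", 30),
            Map.of("customerId", 1, "total", 12));
        for (Map<String, Object> doc : lookup(customers, orders, "customerId", "customerId", "customerOrders")) {
            System.out.println(doc.get("name") + ": "
                + ((List<?>) doc.get("customerOrders")).size() + " order(s)");
        }
    }
}
```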

$unwind

This deconstructs an array field from the input documents, outputting a document for each element. This is especially useful when you need to manipulate or aggregate individual items in an array.

```java
import com.mongodb.client.model.Aggregates;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.unwind("$orders")
    )
).forEach(doc -> System.out.println(doc.toJson()));
```
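The sketch below models $unwind's default behavior in plain Java: each array element yields a copy of the document with the array field replaced by that element; a non-array value is treated as a one-element array; and documents whose field is missing, null or an empty array are dropped (in MongoDB, setting preserveNullAndEmptyArrays to true keeps them). The class name and data are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of { $unwind: "$orders" } with default options.
class UnwindSketch {

    static List<Map<String, Object>> unwind(List<Map<String, Object>> docs, String field) {
        List<Map<String, Object>> out = new ArrayList<>();
        for (Map<String, Object> doc : docs) {
            Object value = doc.get(field);
            if (value instanceof List) {
                // One output document per element, with the field set to that element.
                for (Object element : (List<?>) value) {
                    Map<String, Object> copy = new LinkedHashMap<>(doc);
                    copy.put(field, element);
                    out.add(copy);
                }
            } else if (value != null) {
                // A non-array value behaves like a one-element array.
                out.add(new LinkedHashMap<>(doc));
            }
            // Missing, null and empty-array fields produce no output documents.
        }
        return out;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> docs = List.of(
            Map.of("name", "a", "orders", List.of("o1", "o2")),
            Map.of("name", "b", "orders", List.of()),
            Map.of("name", "c"));
        unwind(docs, "orders").forEach(System.out::println);
    }
}
```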

These stages can be chained together to perform complex data transformations. For example, you might first filter documents with $match, then group them using $group and finally shape the results with $project to produce a report.
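The match, group and sort chain just described maps naturally onto a Java stream, which can help when reasoning about what each stage receives. The sketch below is a hypothetical in-memory analogue, not driver code: filter plays the role of $match, groupingBy with summingDouble the role of $group with $sum, and a descending sort the role of $sort. The Order class and its fields are assumptions for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical in-memory analogue of the pipeline
//   $match { status: "completed" }
//   -> $group { _id: "$region", totalSales: { $sum: "$amount" } }
//   -> $sort { totalSales: -1 }
class PipelineSketch {

    static final class Order {
        final String status;
        final String region;
        final double amount;

        Order(String status, String region, double amount) {
            this.status = status;
            this.region = region;
            this.amount = amount;
        }
    }

    static List<Map.Entry<String, Double>> completedSalesByRegion(List<Order> orders) {
        Map<String, Double> totals = orders.stream()
            .filter(o -> o.status.equals("completed"))          // $match
            .collect(Collectors.groupingBy(o -> o.region,       // $group
                Collectors.summingDouble(o -> o.amount)));      // $sum
        return totals.entrySet().stream()
            .sorted(Map.Entry.<String, Double>comparingByValue().reversed()) // $sort, descending
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Order> orders = List.of(
            new Order("completed", "east", 50),
            new Order("completed", "west", 80),
            new Order("pending", "east", 500),
            new Order("completed", "east", 10));
        System.out.println(completedSalesByRegion(orders)); // [west=80.0, east=60.0]
    }
}
```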

Binary aggregation operators

Binary aggregation operators take two arguments, typically two fields or a field and a constant (a few, such as $add and $multiply, also accept more than two). They supply the arithmetic and comparison logic used within an aggregation pipeline.

Arithmetic operators

$add

This operator adds values together. For example, you can use $add to compute a total price from a product's base price and applicable tax.

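```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.computed(
                    "totalPrice",
                    new Document("$add", Arrays.asList("$basePrice", "$tax"))
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```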

$subtract

This subtracts one value from another, and is useful when calculating the difference between two dates or quantities.

```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.computed(
                    "priceDifference",
                    new Document(
                        "$subtract",
                        Arrays.asList("$retailPrice", "$discountedPrice")
                    )
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```

$multiply and $divide

These operators are used for multiplication and division operations, like calculating a discounted price ($multiply) or determining ratios ($divide).

```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.computed(
                    "discountedPrice",
                    new Document(
                        "$multiply",
                        Arrays.asList("$price", 0.8)
                    )
                ),
                Projections.computed(
                    "ratio",
                    new Document(
                        "$divide",
                        Arrays.asList("$valueA", "$valueB")
                    )
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```

Comparison operators

$eq (equal)

This checks if two values are equal, and is often used for filtering documents that match specific criteria.

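```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.expr(
                new Document(
                    "$eq",
                    Arrays.asList("$status", "completed")
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```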

$gt (greater than) and $lt (less than)

These operators compare two values and return a boolean result. They are particularly useful when filtering data based on numerical thresholds, like retrieving products priced above a certain value.

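```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.expr(
                new Document(
                    "$gt",
                    Arrays.asList("$price", 100)
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```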

$ne (not equal)

This returns true if the values do not match, and can be used to exclude specific data points.

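```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.expr(
                new Document(
                    "$ne",
                    Arrays.asList("$status", "inactive")
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```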

Comparison and arithmetic operators enable you to perform calculations and conditional checks within the aggregation pipeline, allowing for more dynamic data processing functions.

Vector matching and group modifiers

In advanced aggregation scenarios, it may be necessary to match data across different sets of parameters or apply grouping rules to combine data intelligently. Vector matching and group modifiers play an important role in these situations.

Vector matching

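In query languages such as PromQL (the Prometheus query language), vector-matching keywords like group_left and group_right align data points between data sets whose label sets differ, allowing many-to-one and one-to-many matches. These keywords are not part of MongoDB's aggregation framework. In MongoDB, the equivalent job of aligning related records, such as sales data from two different regions or collections, is done with $lookup, which matches documents on a shared field, or with $group on a common key.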

Group modifiers

In PromQL, the by and without modifiers define which labels an aggregation keeps or drops when data has multiple labels or categories. MongoDB expresses the same idea differently: the _id of a $group stage lists the fields to group by, and $project or $unset removes fields you don't want in the output.

Whichever syntax you use, the goal is the same: precise control over how data points relate to each other, which matters most when dealing with multidimensional data sets.
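In MongoDB, grouping by more than one label is done by giving $group a compound _id, for example { region: "$region", product: "$product" }, so that each distinct combination becomes one output document. The plain-Java sketch below models that with a two-element list as the grouping key. The Sale class and its fields are hypothetical, and the code illustrates the grouping semantics rather than the driver API.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical in-memory model of a compound grouping key:
//   { $group: { _id: { region: "$region", product: "$product" },
//               total: { $sum: "$amount" } } }
class CompoundGroupSketch {

    static final class Sale {
        final String region;
        final String product;
        final double amount;

        Sale(String region, String product, double amount) {
            this.region = region;
            this.product = product;
            this.amount = amount;
        }
    }

    // A List has value-based equals/hashCode, so each distinct
    // (region, product) pair becomes one group, like a compound _id.
    static Map<List<String>, Double> totalsByRegionAndProduct(List<Sale> sales) {
        return sales.stream().collect(Collectors.groupingBy(
            s -> List.of(s.region, s.product),
            Collectors.summingDouble(s -> s.amount)));
    }

    public static void main(String[] args) {
        List<Sale> sales = List.of(
            new Sale("east", "bread", 3),
            new Sale("east", "bread", 2),
            new Sale("east", "milk", 4),
            new Sale("west", "bread", 1));
        totalsByRegionAndProduct(sales)
            .forEach((key, total) -> System.out.println(key + " -> " + total));
    }
}
```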

Working with input documents

The aggregation pipeline processes input documents by evaluating specified expressions against them, ranging from simple operations like filtering to more advanced calculations and transformations.

$expr

This operator allows the use of aggregation expressions in the $match stage, making it possible to filter documents based on computed values. For instance, $expr can be used to compare two fields within a document, such as filtering products where the price field is greater than the cost field.

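```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.expr(
                new Document(
                    "$gt",
                    Arrays.asList("$price", "$cost")
                )
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```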

Array element operations

Aggregation can also manipulate array elements using operators like $unwind, which deconstructs arrays and processes each element individually. You can use $filter to select specific elements from an array field based on given criteria.

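```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.unwind("$categories"),
        Aggregates.match(
            Filters.eq("categories", "Bakery")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```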
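The two approaches mentioned above differ in shape. $unwind plus $match emits one document per matching element and drops documents with no match, while $filter (used inside $project or $addFields) keeps every document and trims its array, possibly to empty. The hypothetical plain-Java sketch below models both on in-memory maps so the difference is visible; it assumes every input document holds an array in the named field.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical in-memory comparison of two ways to select array elements:
//   A) { $unwind: "$categories" }, { $match: { categories: "Bakery" } }
//   B) { $addFields: { categories: { $filter: { input: "$categories", as: "c",
//                                               cond: { $eq: ["$$c", "Bakery"] } } } } }
// Assumes every document has an array in `field`.
class ArrayFilterSketch {

    // A) One output document per matching element; documents with no match disappear.
    static List<Map<String, Object>> unwindThenMatch(List<Map<String, Object>> docs,
                                                     String field, Object wanted) {
        List<Map<String, Object>> out = new ArrayList<>();
        for (Map<String, Object> doc : docs) {
            for (Object element : (List<?>) doc.get(field)) {
                if (Objects.equals(element, wanted)) {
                    Map<String, Object> copy = new LinkedHashMap<>(doc);
                    copy.put(field, element); // the field is now a scalar
                    out.add(copy);
                }
            }
        }
        return out;
    }

    // B) Every document survives; its array keeps only the matching elements.
    static List<Map<String, Object>> filterArrays(List<Map<String, Object>> docs,
                                                  String field, Object wanted) {
        List<Map<String, Object>> out = new ArrayList<>();
        for (Map<String, Object> doc : docs) {
            List<Object> kept = new ArrayList<>();
            for (Object element : (List<?>) doc.get(field)) {
                if (Objects.equals(element, wanted)) {
                    kept.add(element);
                }
            }
            Map<String, Object> copy = new LinkedHashMap<>(doc);
            copy.put(field, kept); // still an array, possibly empty
            out.add(copy);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> docs = List.of(
            Map.of("name", "A", "categories", List.of("Bakery", "Cafe")),
            Map.of("name", "B", "categories", List.of("Cafe")));
        System.out.println("unwind + match: "
            + unwindThenMatch(docs, "categories", "Bakery").size() + " document(s)");
        System.out.println("$filter: "
            + filterArrays(docs, "categories", "Bakery").size() + " document(s)");
    }
}
```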

Field paths

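Aggregation expressions often reference field paths, which point to specific fields within documents. For example, the field path contact.phone reaches the phone field nested inside the contact subdocument, making it possible to work directly with embedded document data. Inside aggregation expressions, a field path is written with a dollar prefix, as "$contact.phone".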

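```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Projections;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.project(
            Projections.fields(
                Projections.include("contact.phone")
            )
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```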
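Resolving a dotted path amounts to walking one level of nesting per segment. The hypothetical helper below does this over nested Java maps and returns null when a segment is missing. It deliberately ignores arrays, which real MongoDB field paths also traverse, so treat it as a simplified model.

```java
import java.util.Map;

// Hypothetical helper modelling how a dotted field path such as "contact.phone"
// reaches into embedded documents. Simplified: it does not traverse arrays,
// which real MongoDB field paths do.
class FieldPathSketch {

    static Object resolve(Map<String, ?> doc, String path) {
        Object current = doc;
        for (String key : path.split("\\.")) {
            if (!(current instanceof Map)) {
                return null; // a path through a non-document value resolves to nothing
            }
            current = ((Map<?, ?>) current).get(key);
        }
        return current; // null when the final field is missing
    }

    public static void main(String[] args) {
        Map<String, Object> doc = Map.of(
            "name", "Ada",
            "contact", Map.of("phone", "555-0100", "email", "ada@example.com"));
        System.out.println(resolve(doc, "contact.phone")); // 555-0100
        System.out.println(resolve(doc, "contact.fax"));   // null
    }
}
```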

The ability to work with input documents flexibly is a key strength of MongoDB's aggregation pipeline, allowing users to extract, reshape and analyze data with precision.

Advanced aggregation topics

Optimizing aggregation pipelines

Optimization is an essential aspect of building efficient aggregation pipelines — especially when dealing with large collections. Here are key techniques to optimize aggregation performance:

Indexing

The $match stage can use indexes to filter documents efficiently when it appears at the start of the pipeline (or when MongoDB's optimizer can move it there). Make sure the fields used in those $match conditions are indexed to speed up query performance.

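```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

// Index the field used by the leading $match stage.
collection.createIndex(new Document("status", 1));

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.eq("status", "active")
        )
    )
).forEach(doc -> System.out.println(doc.toJson()));
```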

Minimize stages

Each stage adds processing overhead, so avoid redundant stages and combine operations where a single stage can do the work. Often more important than the raw stage count is how much data reaches the expensive stages: filtering with $match or condensing with $group early in the pipeline reduces the data volume for everything that follows and can significantly improve speed.

Use projection early

Using $project early in the pipeline to include only necessary fields can reduce the amount of data processed by later stages, saving memory and CPU resources.

Allow disk usage

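By default, each aggregation pipeline stage can use at most 100 MB of RAM. If a stage such as $sort or $group exceeds this limit, MongoDB returns an error unless the allowDiskUse option is set to true, which lets the stage write temporary files to disk. (Starting in MongoDB 6.0, the allowDiskUseByDefault server parameter enables this behavior by default.)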

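```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Sorts;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.sort(
            Sorts.ascending("price")
        )
    )
).allowDiskUse(true)
 .forEach(doc -> System.out.println(doc.toJson()));
```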

$merge for data combination

The $merge stage writes the results of a pipeline into a collection, inserting new documents and merging into or replacing existing ones, and it must be the last stage of the pipeline. By writing results back to a collection, you can materialize intermediate or summary data that other processes and queries can reuse.

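```java
import com.mongodb.client.model.Accumulators;
import com.mongodb.client.model.Aggregates;
import java.util.Arrays;

collection.aggregate(
    Arrays.asList(
        Aggregates.group(
            "$region",
            Accumulators.sum("totalSales", "$sales")
        ),
        // $merge must be the last stage; results are written to "sales_summary"
        Aggregates.merge("sales_summary")
    )
).toCollection(); // runs the pipeline without iterating over the output
```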
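With no options, $merge matches output documents to the target collection on _id, merges fields into documents that already exist (whenMatched: "merge") and inserts the rest (whenNotMatched: "insert"). The hypothetical sketch below models those defaults with an in-memory map keyed by _id. It assumes every result document has an _id, which $group output always does, and it says nothing about how MongoDB performs the writes.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of { $merge: "sales_summary" } with its defaults:
//   on: "_id", whenMatched: "merge", whenNotMatched: "insert".
class MergeSketch {

    // `target` stands in for the output collection, keyed by _id.
    // Assumes every result document carries an _id (as $group output does).
    static void merge(Map<Object, Map<String, Object>> target, List<Map<String, Object>> results) {
        for (Map<String, Object> result : results) {
            Object id = result.get("_id");
            Map<String, Object> existing = target.get(id);
            if (existing != null) {
                existing.putAll(result);                     // whenMatched: "merge"
            } else {
                target.put(id, new LinkedHashMap<>(result)); // whenNotMatched: "insert"
            }
        }
    }

    public static void main(String[] args) {
        Map<Object, Map<String, Object>> summary = new HashMap<>();
        Map<String, Object> east = new LinkedHashMap<>();
        east.put("_id", "east");
        east.put("totalSales", 10);
        east.put("note", "kept from before");
        summary.put("east", east);

        merge(summary, List.of(
            Map.of("_id", "east", "totalSales", 160),
            Map.of("_id", "west", "totalSales", 40)));

        System.out.println(summary.get("east")); // {_id=east, totalSales=160, note=kept from before}
        System.out.println(summary.get("west"));
    }
}
```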

Caching and memory management

Cache results

When possible, store frequently used aggregation results in a separate collection. This avoids recalculating the same data repeatedly and reduces the load on your primary data.

Memory management

Be mindful of memory consumption, especially when working with stages like $sort that can consume significant resources. The allowDiskUse option allows stages to write to temporary files on disk, preventing memory overload.

Best practices and troubleshooting

Common pitfalls and troubleshooting techniques

Developing efficient aggregation pipelines requires awareness of common pitfalls that can hinder performance or accuracy. Here are some common issues and their solutions:

Inefficient operators

Using $unwind without care can multiply the number of documents in the pipeline, since each array element becomes a separate document, which slows down every later stage. Filter with $match before $unwind so that only relevant documents are deconstructed.

Over-reliance on $lookup

The $lookup stage, which performs a join-like operation between collections, can be a major bottleneck if not used efficiently. Index the foreignField in the joined collection, and reduce the number of input documents with $match before the $lookup stage.

Large output documents

Each document in the aggregation result must fit within the 16 MB BSON document size limit. Be careful with accumulators such as $push and $addToSet in $group, and with $lookup output arrays, which can grow large; use $project to trim fields you don't need.

Using explain, hint and maxTimeMS

explain

Use explain to see the execution plan of an aggregation pipeline. It shows how MongoDB executes each stage (and, with ExplainVerbosity.EXECUTION_STATS, runtime statistics), helping you identify inefficient stages or operations.

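```java
import com.mongodb.ExplainVerbosity;
import com.mongodb.client.model.Accumulators;
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

Document explanation = collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.eq("categories", "Bakery")
        ),
        Aggregates.group(
            "$stars",
            Accumulators.sum("count", 1)
        )
    )
).explain(ExplainVerbosity.EXECUTION_STATS);

System.out.println(explanation.toJson());
```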

hint

When you know which index is best for your query, you can use the hint option to instruct MongoDB to use that index during the aggregation. This can reduce query times substantially if MongoDB’s query planner does not choose the optimal index on its own.

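```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import org.bson.Document;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.eq("status", "completed")
        )
    )
).hint(new Document("status", 1))
 .forEach(doc -> System.out.println(doc.toJson()));
```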

maxTimeMS

Use the maxTimeMS option (maxTime in the Java driver) to set a time limit for an aggregation operation. If the pipeline runs longer than the limit, MongoDB aborts it with an error, preventing excessive resource consumption.

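```java
import com.mongodb.client.model.Aggregates;
import com.mongodb.client.model.Filters;
import java.util.Arrays;
import java.util.concurrent.TimeUnit;

collection.aggregate(
    Arrays.asList(
        Aggregates.match(
            Filters.eq("status", "active")
        )
    )
).maxTime(1000L, TimeUnit.MILLISECONDS)
 .forEach(doc -> System.out.println(doc.toJson()));
```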

Leveraging SingleStore Kai™ with MongoDB aggregation

While MongoDB's aggregation pipeline is powerful, combining it with SingleStore Kai™ can further optimize operations, especially for scenarios involving both transactional and analytical workloads.

Unified workloads

SingleStore Kai is a MongoDB-compatible API that SingleStore positions as delivering up to 100x faster operations for MongoDB applications, enabling you to run both transactional and analytical queries on the same data set without requiring major code changes. You can use SingleStore Kai for high-speed, real-time data aggregation while keeping MongoDB's flexible document model.

Interoperability and enhanced performance

SingleStore Kai allows applications built on MongoDB to benefit from enhanced performance without any query transformations. By simply changing the endpoint to SingleStore Kai, users can run aggregation operations such as $count, $lookup and $group more efficiently, while still using MongoDB's aggregation syntax.

Try SingleStore Kai today

Aggregation operators in MongoDB are essential tools for processing and analyzing data from multiple sources. By using these and other operators more effectively, you can derive insights that go beyond simple data retrieval.

The aggregation pipeline offers a flexible way to perform a series of transformations on the same set of your data, from basic filtering and grouping to advanced operations like joins and array manipulations. Mastering its key stages and understanding how to chain them together allows developers to create sophisticated data processing workflows that address various use cases, including generating business metrics, producing reports and enriching data.

Optimization plays a vital role in ensuring aggregation pipelines run efficiently, especially for large data sets. Techniques like proper indexing, reducing the total number of stages and using the allowDiskUse option can enhance performance. Leveraging SingleStore with MongoDB aggregation further optimizes operations for scenarios requiring both real-time transactional performance and complex analytical workloads.

Finally, understanding best practices and troubleshooting methods is crucial for building reliable aggregation solutions. By using the explain, hint and maxTimeMS options, developers can optimize pipeline performance, avoid common pitfalls and build robust systems that meet both functional and performance requirements.

Frequently Asked Questions