Image Matching with SQL

Notebook

Note

This notebook can be run on a Free Starter Workspace. To create a Free Starter Workspace navigate to Start using the left nav. You can also use your existing Standard or Premium workspace with this Notebook.

SingleStoreDB can supercharge your apps with AI!

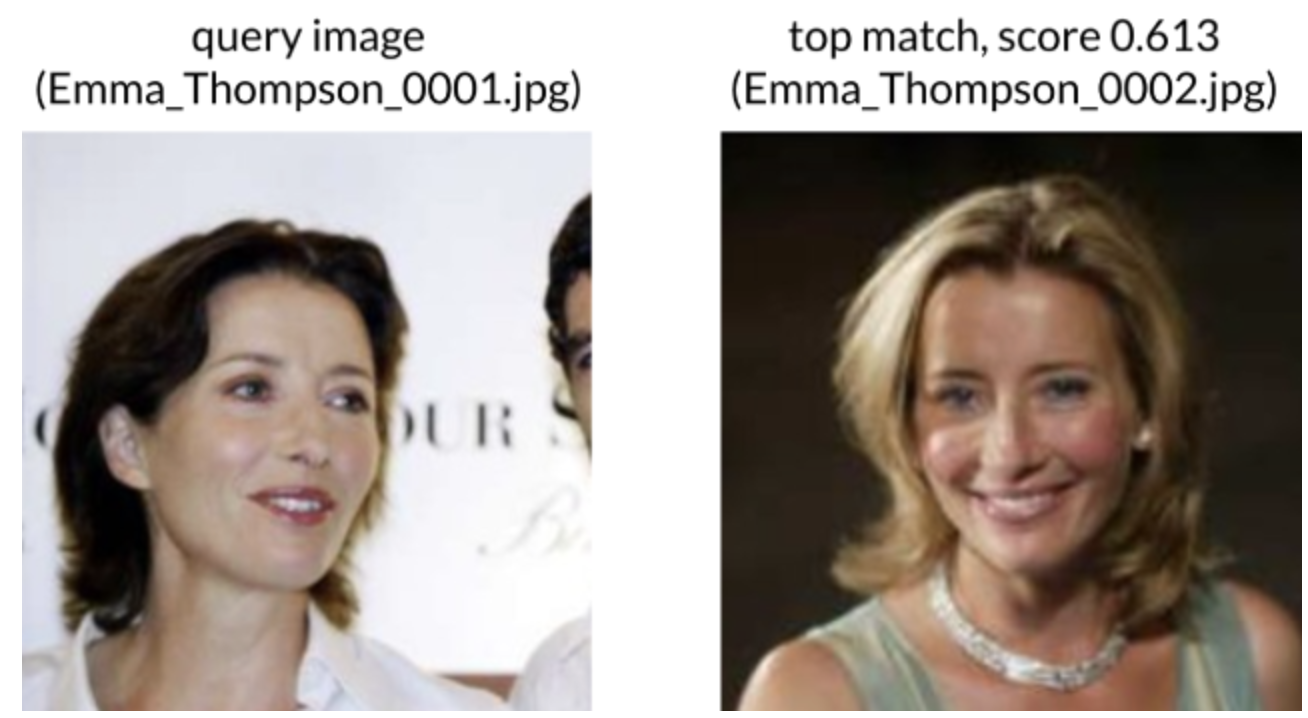

In this notebook, we’ll demonstrate how we use the dot_product function (for cosine similarity) to find a matching image of a celebrity from among 7 thousand records in just 3 milliseconds!

Efficient retrieval of high-dimensional vectors and handling of large-scale vector similarity matching workloads are made possible by SingleStore’s distributed architecture and efficient low-level execution. SingleStoreDB powers many AI applications including face matching, product photo matching, object recognition, text similarity matching, and sentiment analysis.

1. Create a workspace in your workspace group

S-00 is sufficient.

Action Required

If you have a Free Starter Workspace deployed already, select the database from drop-down menu at the top of this notebook. It updates the connection_url to connect to that database.

2. Create a Database named image_recognition

The code below will drop the current image_recognition database and create a fresh one.

In [1]:

1shared_tier_check = %sql show variables like 'is_shared_tier'2if not shared_tier_check or shared_tier_check[0][1] == 'OFF':3 %sql DROP DATABASE IF EXISTS image_recognition;4 %sql CREATE DATABASE image_recognition;

Action Required

Make sure to select the image_recognition database from the drop-down menu at the top of this notebook. It updates the connection_url which is used by the %%sql magic command and SQLAlchemy to make connections to the selected database.

3. Install and import the following libraries

This will take approximately 40 seconds. We are using the --quiet option of pip here to keep

the log messages from filling the output. You can remove that option if you want to see

the installation process.

You may see messages printed about not being able to find cuda drivers or TensorRT. These can be ignored.

In [2]:

1!pip3 install boto3 matplotlib tensorflow opencv-python-headless --quiet2 3import json4import os5import random6import urllib.request7 8import boto39import cv210import botocore.exceptions11import ipywidgets as widgets12import tensorflow.compat.v1 as tf13import matplotlib.pyplot as plt14import numpy as np15import pandas as pd16import requests17import singlestoredb as s218import tensorflow.compat.v1 as tf19from botocore import UNSIGNED20from botocore.client import Config21 22tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)23tf.disable_v2_behavior()

4. Create a table of images of people

The table will contain two columns: 1) the filename containing the image and 2) the vector embedding of the image as a blob containing an array of 32-bit floats.

In [3]:

1%%sql2CREATE TABLE people /* Creating table for sample data. */(3 filename VARCHAR(255),4 vector BLOB,5 SHARD(filename)6);

5. Import our sample dataset into the table

This dataset has 7000 vector embeddings of celebrities!

Note that we are using the converters= parameter of pd.read_csv to parse the text as a JSON array and convert it

to a numpy array for the resulting DataFrame column.

In [4]:

1url = 'https://raw.githubusercontent.com/singlestore-labs/singlestoredb-samples/main/' + \2 'Tutorials/Face%20matching/celebrity_data.sql'

In [5]:

1def json_to_numpy_array(x: str | None) -> np.ndarray | None:2 """Convert JSON array string to numpy array."""3 return np.array(json.loads(x), dtype='f4') if x else None4 5 6# Read data into DataFrame7df = pd.read_csv(url, sep='"', usecols=[1, 3], names=['filename', 'vector'],8 converters=dict(vector=json_to_numpy_array))9 10# Create database connection11conn = s2.create_engine().connect()12 13# Upload DataFrame14df.to_sql('people', con=conn, index=False, if_exists='append')

6. Run our image matching algorithm using just 2 lines of SQL

In this example, we use an image of Adam Sandler and find the 5 closest images in our database to it. We use the dot_product function to measure cosine_similarity of each vector in the database to the input image.

In [6]:

1%%sql2SET @v = (SELECT vector FROM people WHERE filename = "Adam_Sandler/Adam_Sandler_0003.jpg");3SELECT filename, DOT_PRODUCT(vector, @v) AS score FROM people ORDER BY score DESC LIMIT 5;

7. Pick an image of a celebrity and see which images matched closest to it!

Run the code cell

Pick a celebrity picture

Wait for the match!

In [7]:

1s3 = boto3.resource('s3', region_name='us-east-1', config=Config(signature_version=UNSIGNED))2bucket = s3.Bucket('studiotutorials')3prefix = 'face_matching/'4 5peoplenames = %sql SELECT filename FROM people ORDER BY filename;6 7names = [x[0] for x in peoplenames]8 9out = widgets.Output(layout={'border': '1px solid black'})10 11def on_value_change(change: widgets.Output) -> None:12 """Handle a value change event on a drop-down menu."""13 with out:14 out.clear_output();15 selected_name = change.new16 countdb = %sql SELECT COUNT(*) FROM people WHERE filename = '{{selected_name}}';17 18 if int(countdb[-1][0]) > 0:19 %sql SET @v = (SELECT vector FROM people WHERE filename = '{{selected_name}}');20 result = %sql SELECT filename, DOT_PRODUCT(vector, @v) AS score FROM people ORDER BY score DESC LIMIT 5;21 original = "original.jpg"22 images = []23 matches = []24 try:25 bucket.download_file(prefix + selected_name, original)26 images.append(original)27 except botocore.exceptions.ClientError as e:28 if e.response['Error']['Code'] == "404":29 bucket.download_file(prefix + "error.jpg", original)30 else:31 raise32 cnt = 033 for res in result:34 print(res)35 temp_file = "match" + str(cnt) + ".jpg"36 images.append(temp_file)37 matches.append(res[1])38 try:39 bucket.download_file(prefix + res[0], temp_file)40 except botocore.exceptions.ClientError as e:41 if e.response['Error']['Code'] == "404":42 bucket.download_file(prefix + "error.jpg", temp_file)43 else:44 raise45 cnt += 146 fig, axes = plt.subplots(nrows=1, ncols=6, figsize=(40, 40))47 for i in range(6):48 axes[i].imshow(plt.imread(images[i]))49 axes[i].set_xticks([])50 axes[i].set_yticks([])51 axes[i].set_xlabel('')52 axes[i].set_ylabel('')53 if i == 0:54 axes[i].set_title("Original Image", fontsize=14)55 else:56 axes[i].set_title("Match " + str(i) + ". Score: " + str(matches[i-1]), fontsize=14)57 plt.show()58 else:59 print("No match for this image as it was not inserted into the People Table")60 61dropdown = widgets.Dropdown(62 options=names,63 description='Select an Image:',64 placeholder='Select an Image!',65 style={'description_width': 'initial'},66 layout={'width': 'max-content'}67)68 69display(dropdown)70dropdown.observe(on_value_change, names='value')71display(out)

8. See which celebrity you look most like!

In this step, you'll need to upload a picture of yourself. Note that your image MUST be at least 160x160 pixels. Head-shots and zoomed-in photos work better as we don't preprocess the image to just isolate the facial context! We only have 7,000 pictures so matching might be limited.

Run the code cell

Upload your picture

Wait for the match!

A low score for matching is less than 0.6.

In [8]:

1def prewhiten(x: np.ndarray) -> np.ndarray:2 """Prewhiten image data."""3 mean = np.mean(x)4 std = np.std(x)5 std_adj = np.maximum(std, 1.0 / np.sqrt(x.size))6 y = np.multiply(np.subtract(x, mean), 1 / std_adj)7 return y8 9 10def crop(image: np.ndarray, random_crop: bool, image_size: int) -> np.ndarray:11 """Crop an image to a given size."""12 if image.shape[1] > image_size:13 sz1 = int(image.shape[1] // 2)14 sz2 = int(image_size // 2)15 if random_crop:16 diff = sz1 - sz217 (h, v) = (np.random.randint(-diff, diff + 1), np.random.randint(-diff, diff + 1))18 else:19 (h, v) = (0, 0)20 image = image[(sz1 - sz2 + v):(sz1 + sz2 + v), (sz1 - sz2 + h):(sz1 + sz2 + h), :]21 return image22 23 24def flip(image: np.ndarray, random_flip: bool) -> np.ndarray:25 """Flip the image data left-to-right."""26 if random_flip and np.random.choice([True, False]):27 image = np.fliplr(image)28 return image29 30 31def load_data(32 image_paths: list[str],33 do_random_crop: bool,34 do_random_flip: bool,35 image_size: int,36 do_prewhiten: bool=True,37) -> np.ndarray:38 nrof_samples = len(image_paths)39 images = np.zeros((nrof_samples, image_size, image_size, 3))40 for i in range(nrof_samples):41 img = cv2.imread(image_paths[i])42 if do_prewhiten:43 img = prewhiten(img)44 img = crop(img, do_random_crop, image_size)45 img = flip(img, do_random_flip)46 images[i, :, :, :] = img47 return images48 49 50new_out= widgets.Output(layout={'border': '1px solid black'})51 52s3 = boto3.resource('s3', region_name='us-east-1', config=Config(signature_version=UNSIGNED))53bucket = s3.Bucket('studiotutorials')54prefix = 'face_matching/'55names=[]56 57local_folder = './face_matching_models'58if not os.path.exists(local_folder):59 os.makedirs(local_folder)60 61s3 = boto3.client('s3', region_name='us-east-1', config=Config(signature_version=UNSIGNED))62s3.download_file('studiotutorials', 'face_matching_models/20170512-110547.pb',63 os.path.join(local_folder, '20170512-110547.pb'))64pb_file_path = './face_matching_models/20170512-110547.pb'65 66# Load the .pb file into a graph67with tf.io.gfile.GFile(pb_file_path, 'rb') as f:68 graph_def = tf.compat.v1.GraphDef()69 graph_def.ParseFromString(f.read())70 71 72def handle_upload(change: widgets.Output) -> None:73 with new_out:74 new_out.clear_output();75 new_file_name=''76 77 # Get the uploaded file78 uploaded_file = change.new79 if uploaded_file[0]['name'].lower().endswith(('.png', '.jpg', '.jpeg')):80 # Do something with the uploaded file81 file_name = uploaded_file[0]['name']82 random_number = random.randint(1, 100000000)83 new_file_name = f"{file_name.split('.')[0]}_{random_number}.{file_name.split('.')[-1]}"84 file_content = uploaded_file[0]['content']85 with open(new_file_name, 'wb') as f:86 f.write(file_content)87 with tf.compat.v1.Session() as sess:88 sess.graph.as_default()89 tf.import_graph_def(graph_def, name='')90 images_placeholder = sess.graph.get_tensor_by_name("input:0")91 embeddings = sess.graph.get_tensor_by_name("embeddings:0")92 phase_train_placeholder = tf.get_default_graph().get_tensor_by_name("phase_train:0")93 phase_train = False94 img = load_data([new_file_name], False, False, 160)95 feed_dict = {96 images_placeholder: img,97 phase_train_placeholder: phase_train,98 }99 embeddings_ = sess.run(embeddings, feed_dict=feed_dict)100 embeddings_list = [float(x) for x in embeddings_[0]]101 embeddings_json = json.dumps(embeddings_list)102 %sql insert into people values('{{new_file_name}}', json_array_pack_f32("{{embddings_json}}"));103 else:104 print("Upload a .png, .jpg or .jpeg image")105 106 num_matches = 5107 countdb = %sql SELECT COUNT(*) FROM people WHERE filename = '{{new_file_name}}';108 109 if int(countdb[-1][0]) > 0:110 %sql SET @v = (SELECT vector FROM people WHERE filename = '{{new_file_name}}');111 result = %sql SELECT filename, DOT_PRODUCT(vector, @v) AS score FROM people ORDER BY score DESC LIMIT 5;112 images = []113 matches = []114 images.append(new_file_name)115 cnt = 0116 for res in result:117 print(res)118 if (cnt == 0):119 temp_file = new_file_name120 else:121 temp_file = "match" + str(cnt) + ".jpg"122 try:123 bucket.download_file(prefix + res[0], temp_file)124 except botocore.exceptions.ClientError as e:125 if e.response['Error']['Code'] == "404":126 bucket.download_file(prefix + "error.jpg", temp_file)127 else:128 raise129 images.append(temp_file)130 matches.append(res[1])131 cnt += 1132 fig, axes = plt.subplots(nrows=1, ncols=num_matches+1, figsize=(40, 40))133 %sql DELETE FROM people WHERE filename = '{{new_file_name}}';134 for i in range(num_matches+1):135 axes[i].imshow(plt.imread(images[i]))136 axes[i].set_xticks([])137 axes[i].set_yticks([])138 axes[i].set_xlabel('')139 axes[i].set_ylabel('')140 if i == 0:141 axes[i].set_title("Original Image", fontsize=14)142 else:143 axes[i].set_title("Match " + str(i) + ". Score: " + str(matches[i-1]), fontsize=14)144 plt.show()145 else:146 print("No match for this image as it was not inserted into the People Database")147 148upload_button = widgets.FileUpload()149display(upload_button)150upload_button.observe(handle_upload, names='value')151display(new_out)

9. Clean up

Action Required

If you created a new database in your Standard or Premium Workspace, you can drop the database by running the cell below. Note: this will not drop your database for Free Starter Workspaces. To drop a Free Starter Workspace, terminate the Workspace using the UI.

In [9]:

1shared_tier_check = %sql show variables like 'is_shared_tier'2if not shared_tier_check or shared_tier_check[0][1] == 'OFF':3 %sql DROP DATABASE IF EXISTS image_recognition;

Details

About this Template

Facial recognition using dot_product function on vectors stored in SingleStoreDB.

This Notebook can be run in Shared Tier, Standard and Enterprise deployments.

Tags

License

This Notebook has been released under the Apache 2.0 open source license.

See Notebook in action

Launch this notebook in SingleStore and start executing queries instantly.