SingleStore Now 2024 Raffle


Note

This notebook can be run on a Free Starter Workspace. To create a Free Starter Workspace, navigate to Start in the left navigation. You can also use your existing Standard or Premium workspace with this notebook.

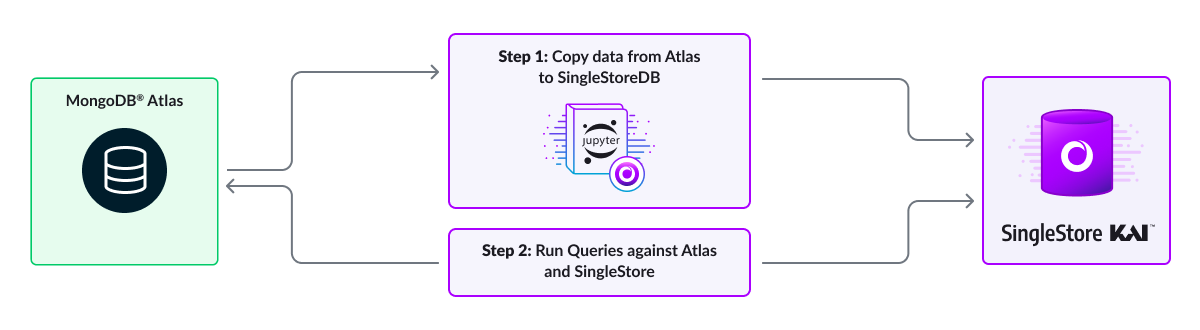

The dataset used in this competition/demo contains e-commerce data about customers and the products they have purchased. In this notebook, we will run a few queries using SingleStore Kai, which lets us migrate MongoDB data into SingleStore and run MongoDB queries directly against it. To create your entry for the raffle, please open and complete the following form: https://forms.gle/n8KjTpJgPL29wFHV9

If you have any issues while completing the form, please reach out to a SingleStore team member at the event.

Install libraries and import modules

First, we need to install the Python libraries this notebook uses: pymongo, pandas, and ipywidgets.

!pip install pymongo pandas ipywidgets --quiet

Next, we make sure there is a database to work in. On a Free Starter Workspace (shared tier), the notebook reuses the database already attached to it. On any other workspace, it creates a new database named new_transactions.
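
The branch in the next cell can be restated as a pure function, which makes its two outcomes easy to check without a database connection. This is a minimal sketch: `choose_database` is a hypothetical name, and the row tuples only imitate what the `%sql` magic returns for `show variables like 'is_shared_tier'`.

```python
from typing import Sequence, Tuple

DEFAULT_DATABASE = "new_transactions"


def choose_database(
    shared_tier_rows: Sequence[Sequence[str]],
    current_database: str,
    default: str = DEFAULT_DATABASE,
) -> Tuple[str, bool]:
    """Hypothetical helper: return (database_name, needs_create).

    shared_tier_rows stands in for the rows returned by
    SHOW VARIABLES LIKE 'is_shared_tier', e.g. [("is_shared_tier", "ON")].
    """
    on_shared_tier = bool(shared_tier_rows) and shared_tier_rows[0][1] == "ON"
    if on_shared_tier:
        # Free Starter Workspace: reuse the database already selected for the notebook
        return current_database, False
    # Any other workspace (or no row returned): use a dedicated database that must be created
    return default, True


print(choose_database([("is_shared_tier", "ON")], "my_starter_db"))   # ('my_starter_db', False)
print(choose_database([("is_shared_tier", "OFF")], "my_starter_db"))  # ('new_transactions', True)
print(choose_database([], "my_starter_db"))                           # ('new_transactions', True)
```

Keeping the decision separate from the `%sql` calls means only the final `CREATE DATABASE` step needs a live workspace.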

shared_tier_check = %sql show variables like 'is_shared_tier'

if shared_tier_check and shared_tier_check[0][1] == 'ON':
    current_database = %sql SELECT DATABASE() as CurrentDatabase
    database_to_use = current_database[0][0]
else:
    database_to_use = "new_transactions"
    %sql CREATE DATABASE {{database_to_use}}

Next, import the modules the notebook uses. pymongo provides the client that connects both to SingleStore Kai and to the MongoDB Atlas instance where the initial data is stored.

import os
import time

import numpy as np
import pandas as pd
import pymongo
from pymongo import MongoClient

Connect to Atlas and SingleStore Kai endpoints

Next, we connect to the MongoDB Atlas instance, which holds our initial data, using a pymongo client.

# No need to edit anything
myclientmongodb = pymongo.MongoClient("mongodb+srv://mongo_sample_reader:SingleStoreRocks27017@cluster1.tfutgo0.mongodb.net/?retryWrites=true&w=majority")
mydbmongodb = myclientmongodb["new_transactions"]
mongoitems = mydbmongodb["items"]
mongocusts = mydbmongodb["custs"]
mongotxs = mydbmongodb["txs"]

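Then, we connect to the SingleStore Kai endpoint. Kai accepts MongoDB client connections, so the same pymongo client can import and query the data we move over from MongoDB Atlas. The connection_url_kai variable is not defined in this notebook; it is supplied by the SingleStore notebook environment.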

db_to_use = database_to_use
s2clientmongodb = pymongo.MongoClient(connection_url_kai)
s2dbmongodb = s2clientmongodb[db_to_use]
s2mongoitems = s2dbmongodb["items"]
s2mongocusts = s2dbmongodb["custs"]
s2mongotxs = s2dbmongodb["txs"]
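
The Atlas cell and the Kai cell build their collection handles the same way, through `client[database][collection]` indexing. A minimal sketch of one shared helper follows. `open_collections` is a hypothetical name, and the nested dictionaries in the usage example only stand in for a real `MongoClient` so the sketch runs offline.

```python
from typing import Any, Dict, Iterable

COLLECTION_NAMES = ("items", "custs", "txs")


def open_collections(client: Any, db_name: str,
                     names: Iterable[str] = COLLECTION_NAMES) -> Dict[str, Any]:
    """Hypothetical helper: return {collection_name: handle} for one database.

    Relies only on client[db_name][collection_name] indexing, which
    pymongo.MongoClient supports; a nested mapping behaves the same way.
    """
    database = client[db_name]
    return {name: database[name] for name in names}


# Usage with nested dicts standing in for a client (no network needed)
fake_client = {"new_transactions": {"items": "items-handle",
                                    "custs": "custs-handle",
                                    "txs": "txs-handle"}}
handles = open_collections(fake_client, "new_transactions")
print(handles["txs"])  # txs-handle
```

In the notebook, `open_collections(myclientmongodb, "new_transactions")` and `open_collections(s2clientmongodb, db_to_use)` would build the Atlas and Kai handles with identical code. This reflects the point of Kai: only the connection string differs.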

Copy Atlas collections into SingleStore Kai

Next, we copy the MongoDB data hosted in Atlas over to SingleStore. The following cell reads each selected collection from Atlas and inserts its documents into a collection of the same name in SingleStore Kai. With the data in SingleStore, we can migrate away from MongoDB and keep all of our data storage and queries in a single database instead of in multiple data silos.
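
The copy loop can also be written without the pandas round trip. That round trip fills fields missing from some documents with NaN, while a plain list of documents keeps each document unchanged. This is a minimal sketch under stated assumptions: `copy_collections`, `FakeCollection`, and `FakeDatabase` are hypothetical names, and the two fake classes only imitate the pymongo methods used here (`find`, `insert_many`, and `db[name]` indexing) so the sketch runs without a server. It also skips empty collections, because pymongo's `insert_many` rejects an empty list.

```python
from typing import Any, Dict, Iterable, List


def copy_collections(source_collections: Iterable[Any], target_db: Any) -> Dict[str, int]:
    """Hypothetical helper: copy each source collection into the same-named
    collection of target_db and return the number of documents copied per name.

    Assumes pymongo-style objects: a source exposes .name and .find(), and
    target_db[name] exposes .insert_many().
    """
    copied: Dict[str, int] = {}
    for collection in source_collections:
        documents: List[dict] = list(collection.find())  # documents kept as-is, _id included
        if documents:  # insert_many refuses an empty list, so skip empty collections
            target_db[collection.name].insert_many(documents)
        copied[collection.name] = len(documents)
    return copied


# Hypothetical in-memory stand-ins so the sketch runs without a server
class FakeCollection:
    def __init__(self, name: str, docs: Iterable[dict] = ()) -> None:
        self.name = name
        self.docs = list(docs)

    def find(self):
        return iter(self.docs)

    def insert_many(self, docs: List[dict]) -> None:
        if not docs:  # imitates pymongo rejecting an empty batch
            raise TypeError("documents must be a non-empty list")
        self.docs.extend(docs)


class FakeDatabase(dict):
    def __missing__(self, name: str) -> FakeCollection:
        # Like pymongo, indexing any collection name returns a usable handle
        self[name] = FakeCollection(name)
        return self[name]


sources = [FakeCollection("items", [{"_id": 1}, {"_id": 2}]), FakeCollection("custs")]
target = FakeDatabase()
print(copy_collections(sources, target))  # {'items': 2, 'custs': 0}
```

With the real clients, the call would be `copy_collections([mongoitems, mongocusts, mongotxs], s2dbmongodb)`.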

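mongocollections = [mongoitems, mongocusts, mongotxs]

for mongo_collection in mongocollections:
    # Read every document from the Atlas collection.
    # Note: the pandas round trip fills fields missing from some documents with NaN.
    df = pd.DataFrame(list(mongo_collection.find())).reset_index(drop=True)
    data_dict = df.to_dict(orient='records')
    # Write the documents into the same-named collection in SingleStore Kai
    s2mongo_collection = s2dbmongodb[mongo_collection.name]
    s2mongo_collection.insert_many(data_dict)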

QUERY 1: Total quantity of products sold across all products

Our first query on the newly migrated data computes the total quantity sold across every product in the dataset. As you'll see, even though the data now lives in SingleStore, we can still use MongoDB query syntax through SingleStore Kai. The cell runs the aggregation 10 times and records the latency of each run in s2_times.
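
The `$group` stage in the next cell puts every document into a single group (`_id: None`) and adds up `item.quantity`. A plain-Python sketch over a few invented documents shows what that computes. `get_path` and `total_quantity` are hypothetical helpers, and the sketch follows `$sum`'s documented behavior of skipping missing and non-numeric values.

```python
from numbers import Number
from typing import Any, Iterable, Mapping


def get_path(document: Mapping[str, Any], dotted_path: str) -> Any:
    """Hypothetical helper: follow a dotted path such as "item.quantity"; None if any part is missing."""
    value: Any = document
    for key in dotted_path.split("."):
        if not isinstance(value, Mapping) or key not in value:
            return None
        value = value[key]
    return value


def total_quantity(documents: Iterable[Mapping[str, Any]], path: str = "item.quantity") -> Any:
    """Plain-Python analogue of {"$group": {"_id": None, "totalQuantity": {"$sum": "$item.quantity"}}}.

    Like $sum, missing and non-numeric values (including booleans) are ignored.
    """
    return sum(
        value
        for value in (get_path(doc, path) for doc in documents)
        if isinstance(value, Number) and not isinstance(value, bool)
    )


# Invented sample documents, shaped like the items collection is assumed to be
sample_items = [
    {"item": {"name": "mug", "quantity": 3}},
    {"item": {"name": "pen", "quantity": 5}},
    {"item": {"name": "cap"}},                   # no quantity: ignored
    {"item": {"name": "bag", "quantity": "2"}},  # string: ignored
]
print(total_quantity(sample_items))  # 8
```

One difference: on an empty collection, the `$group` stage returns no document at all, while this sketch returns 0. That is the same fallback value the notebook cell uses.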

num_iterations = 10

# Pipeline: total quantity of products sold across all products
pipeline = [
    {"$group": {"_id": None, "totalQuantity": {"$sum": "$item.quantity"}}}
]

# Run the aggregation against SingleStore Kai several times, recording each run's latency
s2_times = []
for i in range(num_iterations):
    s2_start_time = time.time()
    s2_result = s2mongoitems.aggregate(pipeline)
    s2_stop_time = time.time()
    s2_times.append(s2_stop_time - s2_start_time)

# Read the single result document from the last run; an empty collection yields no document
first_doc = next(s2_result, None)
total_quantity = first_doc["totalQuantity"] if first_doc else 0

# Print the total quantity sold
print("Total Product Quantity Sold is", total_quantity)
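
The cell above records each run's latency in `s2_times` but never reports it. Here is a minimal sketch for timing and summarizing such runs. `time_runs` and `summarize` are hypothetical helpers, and `time.perf_counter` replaces the cell's `time.time` because it is a monotonic clock intended for measuring intervals. The workload in the example is a stand-in, not a database call.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_runs(run: Callable[[], object], iterations: int = 10) -> List[float]:
    """Hypothetical helper: call run() `iterations` times and return each duration in seconds."""
    durations: List[float] = []
    for _ in range(iterations):
        start = time.perf_counter()  # monotonic, high-resolution clock for intervals
        run()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations: List[float]) -> Dict[str, float]:
    """Hypothetical helper: summarize durations given in seconds, reported in milliseconds."""
    ms = [d * 1000.0 for d in durations]
    return {
        "runs": len(ms),
        "min_ms": min(ms),
        "median_ms": statistics.median(ms),
        "mean_ms": statistics.mean(ms),
        "max_ms": max(ms),
    }


# Stand-in workload; in the notebook, run could be lambda: list(s2mongoitems.aggregate(pipeline))
stats = summarize(time_runs(lambda: sum(range(10_000)), iterations=5))
print(stats["runs"], stats["min_ms"] <= stats["median_ms"] <= stats["max_ms"])  # 5 True
```

`summarize(s2_times)` would also work directly on the list the notebook already collects. Wrapping the cursor in `list(...)` makes each timed run include fetching the results, not only issuing the command.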

ACTION ITEM!

Take the output from this query and put it into the ANSWER NUMBER 1 field in the Google Form.

QUERY 2: Top-selling product

Our next query finds the top-selling product in our data. Once again, we are issuing a MongoDB query against our SingleStore instance. If we had an application integrated with MongoDB but wanted to migrate to SingleStore, we could do so without having to rewrite the queries within our application!
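
The next pipeline runs `$group` by `item.name`, then `$sort` descending, then `$limit: 1`. Together these amount to "sum the quantities per product and keep the largest". A plain-Python sketch of the same computation over invented sample documents follows. `field` and `top_selling_product` are hypothetical helpers. One caveat: when two products tie, MongoDB does not guarantee which one `$sort` places first, while this sketch always returns the first one it sees.

```python
from collections import defaultdict
from numbers import Number
from typing import Any, Dict, Iterable, Mapping, Optional, Tuple


def field(document: Mapping[str, Any], dotted_path: str) -> Any:
    """Hypothetical helper: resolve a dotted path like "item.name"; None when any part is missing."""
    value: Any = document
    for key in dotted_path.split("."):
        if not isinstance(value, Mapping) or key not in value:
            return None
        value = value[key]
    return value


def top_selling_product(documents: Iterable[Mapping[str, Any]]) -> Optional[Tuple[Any, Any]]:
    """Hypothetical helper: group by item.name, sum numeric item.quantity, return (name, total).

    Returns None when there are no documents, like an empty aggregation result.
    """
    totals: Dict[Any, Any] = defaultdict(int)
    for doc in documents:
        quantity = field(doc, "item.quantity")
        is_numeric = isinstance(quantity, Number) and not isinstance(quantity, bool)
        # Every document creates or updates a group, as $group does; non-numeric quantities add 0
        totals[field(doc, "item.name")] += quantity if is_numeric else 0
    if not totals:
        return None
    name = max(totals, key=totals.get)  # on ties, the first name seen wins
    return name, totals[name]


sample_items = [
    {"item": {"name": "mug", "quantity": 3}},
    {"item": {"name": "pen", "quantity": 5}},
    {"item": {"name": "mug", "quantity": 4}},
]
print(top_selling_product(sample_items))  # ('mug', 7)
```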

# Pipeline: return the #1 selling product based on total quantity sold
pipeline = [
    {"$group": {
        "_id": "$item.name",  # Group by product name
        "total_quantity_sold": {"$sum": "$item.quantity"}  # Sum of quantities sold
    }},
    {"$sort": {"total_quantity_sold": -1}},  # Sort by total quantity sold in descending order
    {"$limit": 1}  # Limit to the top product
]

s2_result = s2mongoitems.aggregate(pipeline)

# Retrieve the name of the #1 selling product (None if the aggregation returned nothing)
top_product = next(s2_result, None)
if top_product:
    product_name = top_product["_id"]
    total_quantity_sold = top_product["total_quantity_sold"]
else:
    product_name = "No Data"
    total_quantity_sold = 0

# Print the #1 selling product and its total quantity sold
print("Top-selling product:", product_name, "with total quantity sold", total_quantity_sold)

ACTION ITEM!

Take the output from this query and put it into the ANSWER NUMBER 2 field in the Google Form.

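QUERY 3: Top-selling location

Our last query finds the store location with the most transactions, excluding online orders. The pipeline joins each transaction to its customer record with $lookup, drops transactions whose store_location is "Online", counts the remaining transactions per location, and returns the location with the highest count. Because the {"$limit": 100} stage runs before $group, only the first 100 in-store transactions returned by the $match stage are counted.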
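
The counting part of the Query 3 pipeline can be sketched in plain Python to show how much the early `$limit` matters. The sketch leaves out `$lookup`, because the joined `transaction_links` field is not used by any later stage. `top_store_location` is a hypothetical helper, and the sample transactions are invented. Setting `sample_size=None` drops the limit so you can compare both answers.

```python
from collections import Counter
from itertools import islice
from typing import Any, Iterable, Mapping, Optional, Tuple


def top_store_location(
    transactions: Iterable[Mapping[str, Any]],
    sample_size: Optional[int] = 100,
) -> Optional[Tuple[Any, int]]:
    """Hypothetical analogue of the $match / $limit / $group / $sort / $limit stages.

    Transactions whose store_location is "Online" are dropped first; then only the
    first `sample_size` remaining ones (in input order) are counted, mirroring
    {"$limit": 100} placed before $group. sample_size=None counts all of them.
    """
    # A missing store_location also passes {"$ne": "Online"}, so .get() returning None is kept
    in_store = (t for t in transactions if t.get("store_location") != "Online")
    counts = Counter(t.get("store_location") for t in islice(in_store, sample_size))
    if not counts:
        return None
    location, count = counts.most_common(1)[0]
    return location, count


sample_txs = [
    {"store_location": "Denver"},
    {"store_location": "Online"},
    {"store_location": "Austin"},
    {"store_location": "Austin"},
    {"store_location": "Denver"},
    {"store_location": "Denver"},
]
print(top_store_location(sample_txs))                 # ('Denver', 3)
print(top_store_location(sample_txs, sample_size=3))  # ('Austin', 2)
```

With a limit of 3 the answer changes from Denver to Austin. In the same way, the notebook's answer depends on which 100 in-store transactions the server returns first.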

# Pipeline: exclude "Online" and find the top-selling store location
pipeline = [
    # Join each transaction to its customer record (the joined field is not used by later stages)
    {"$lookup":
        {
            "from": "custs",
            "localField": "customer.email",
            "foreignField": "email",
            "as": "transaction_links",
        }
    },
    {"$match": {"store_location": {"$ne": "Online"}}},  # Exclude Online location
    {"$limit": 100},  # Only the first 100 in-store transactions are counted
    {"$group":
        {
            "_id": {"location": "$store_location"},
            "count": {"$sum": 1}
        }
    },
    {"$sort": {"count": -1}},
    {"$limit": 1}
]

s2_result = s2mongotxs.aggregate(pipeline)

# Retrieve the top-selling location excluding "Online"
top_location = next(s2_result, None)
if top_location:
    location_name = top_location["_id"]["location"]
    transaction_count = top_location["count"]
else:
    location_name = "No Data"
    transaction_count = 0

# Print the top-selling location and its transaction count
print("Top-selling location:", location_name, "with transaction count", transaction_count)

ACTION ITEM!

Take the output from this query and put it into the ANSWER NUMBER 3 field in the Google Form.

Clean up and submit!

Make sure to click Submit on your Google Form so that you are entered into the SingleStore Now 2024 raffle!

Additionally, if you'd like to clean up your instance, you can run the statement below. To learn more about SingleStore, please connect with one of our SingleStore reps here at the conference!

Action Required

If you created a new database in your Standard or Premium Workspace, you can drop the database by running the cell below. Note: this will not drop your database for Free Starter Workspaces. To drop a Free Starter Workspace, terminate the Workspace using the UI.

shared_tier_check = %sql show variables like 'is_shared_tier'
if not shared_tier_check or shared_tier_check[0][1] == 'OFF':
    %sql DROP DATABASE IF EXISTS new_transactions;

Details

About this Template

"Explore the power of SingleStore in this interactive notebook by creating an account, loading data, and running queries for a chance to win the SingleStore Now 2024 Raffle!"

This Notebook can be run in Shared Tier, Standard and Enterprise deployments.


License

This Notebook has been released under the Apache 2.0 open source license.
